- Integration Patterns and Decision Framework

- Solution Decision Framework

- Requirements

- Solution Pattern Catalogue

- Solution Pattern Name: Manual-Internal

- Solution Pattern Name: Manual-External

- Solution Pattern Name: Manual-Cloud

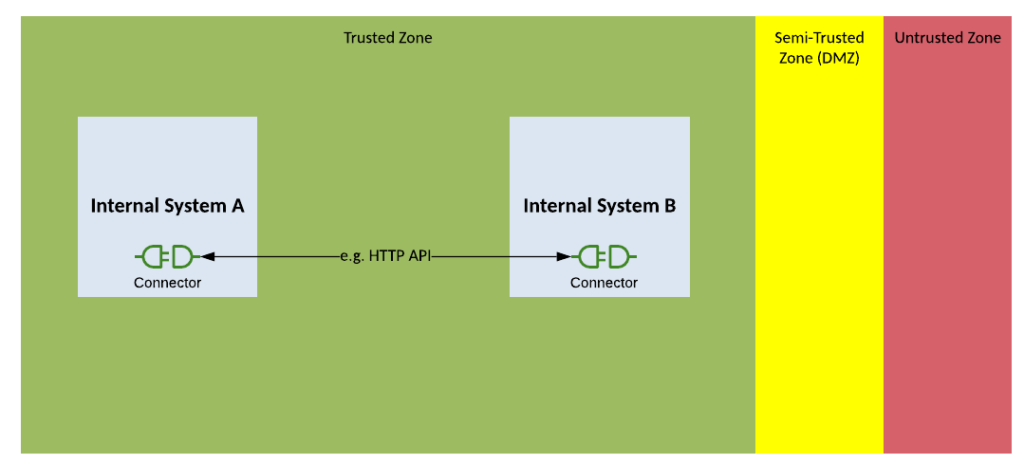

- Solution Pattern Name: API-Internal

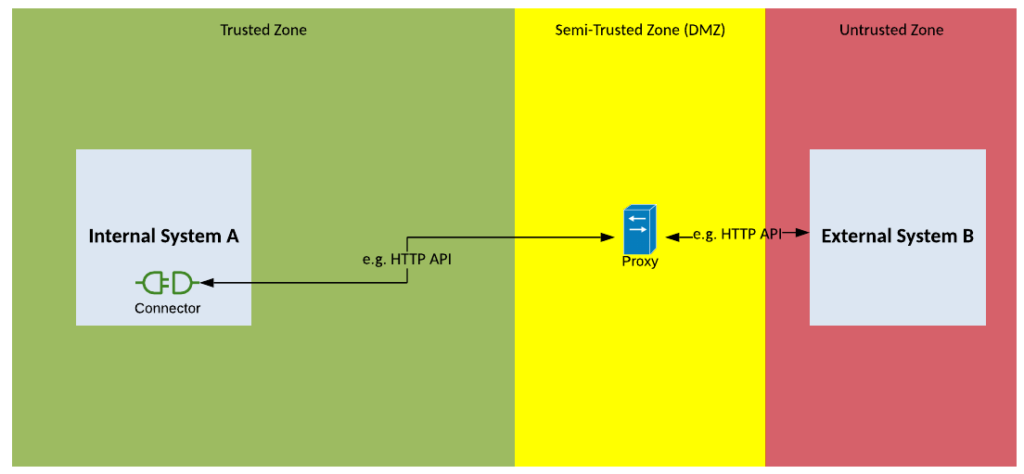

- Solution Pattern Name: API-External

- Solution Pattern Name: Middleware-Internal

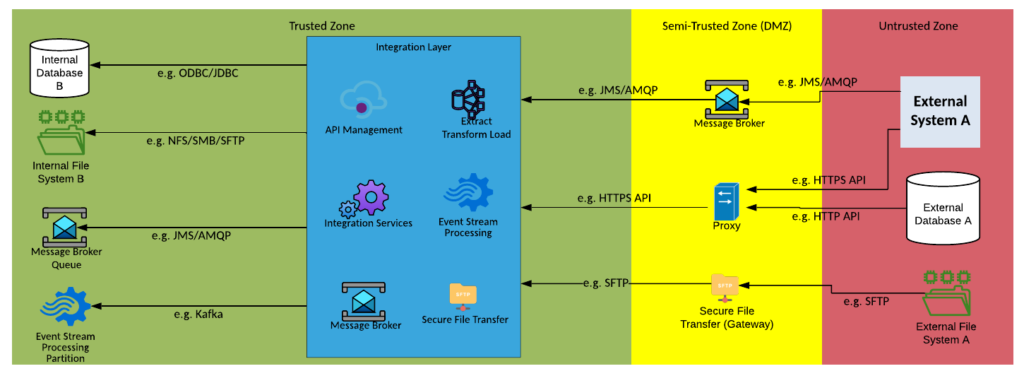

- Solution Pattern Name: Middleware-External

- Solution Pattern Name: Middleware-Cloud

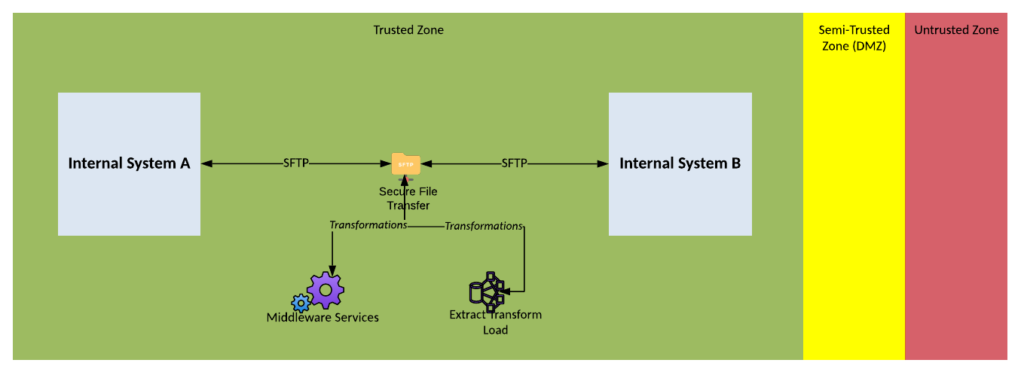

- Solution Pattern Name: FileTransfer-Internal

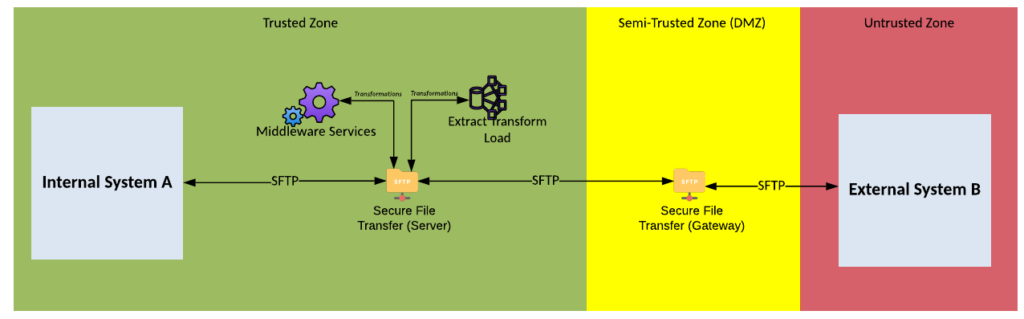

- Solution Pattern Name: FileTransfer-External

- Solution Pattern Name: FileTransfer-Cloud

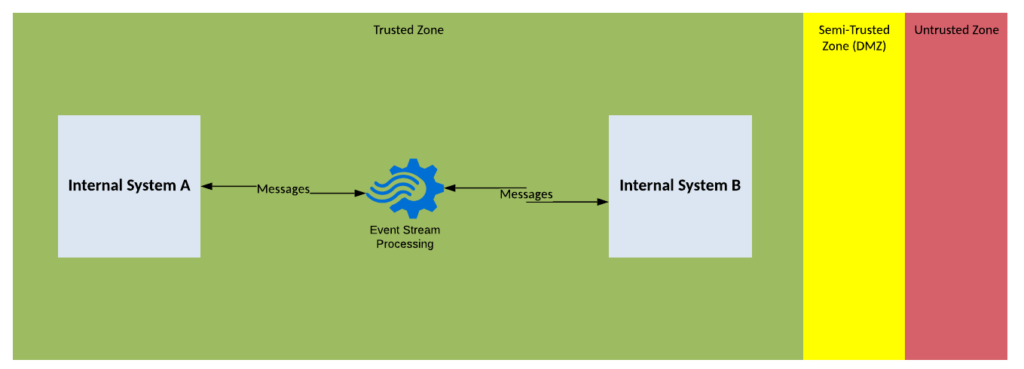

- Solution Pattern Name: ESP-Internal

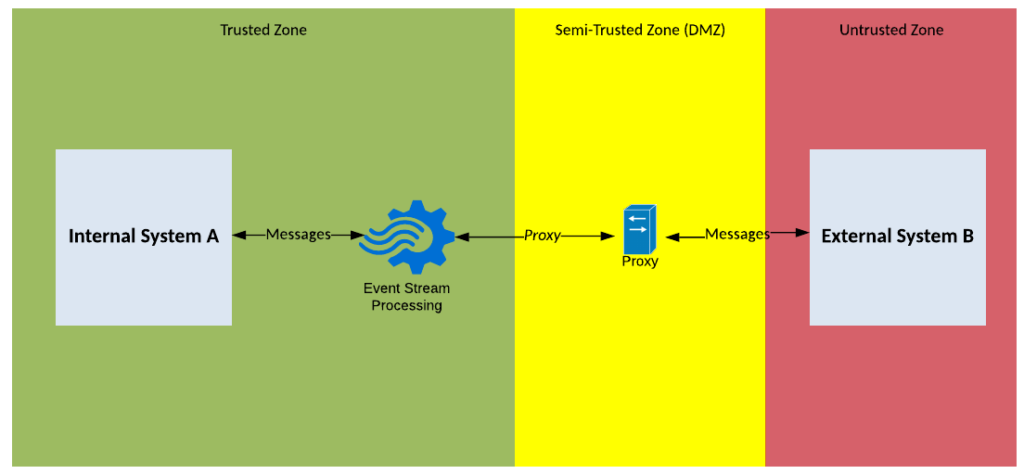

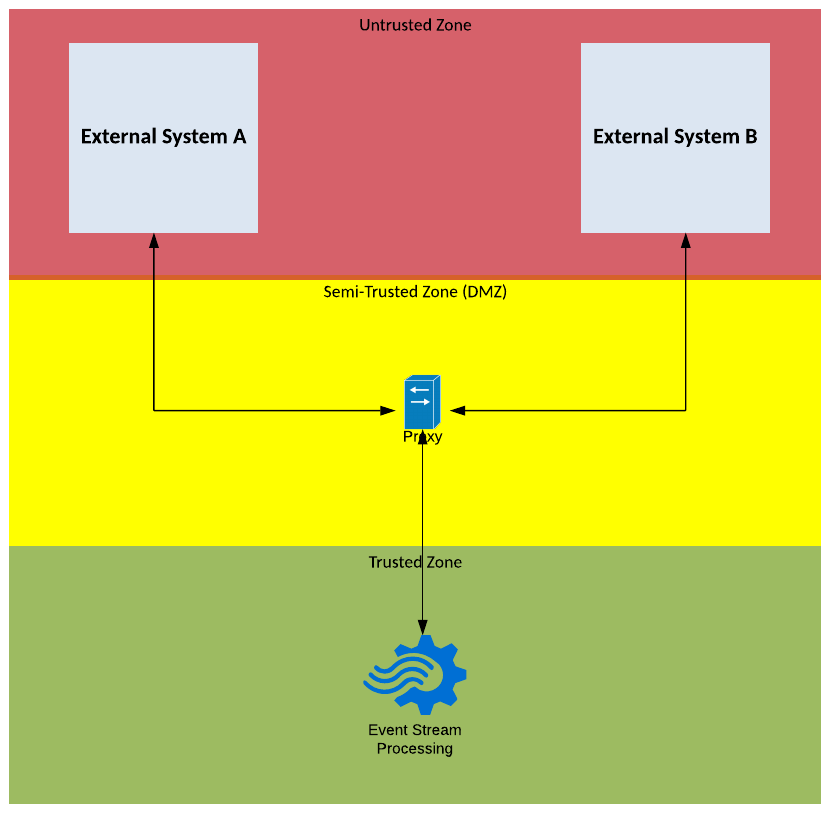

- Solution Pattern Name: ESP-External

- Solution Pattern Name: ESP-Cloud

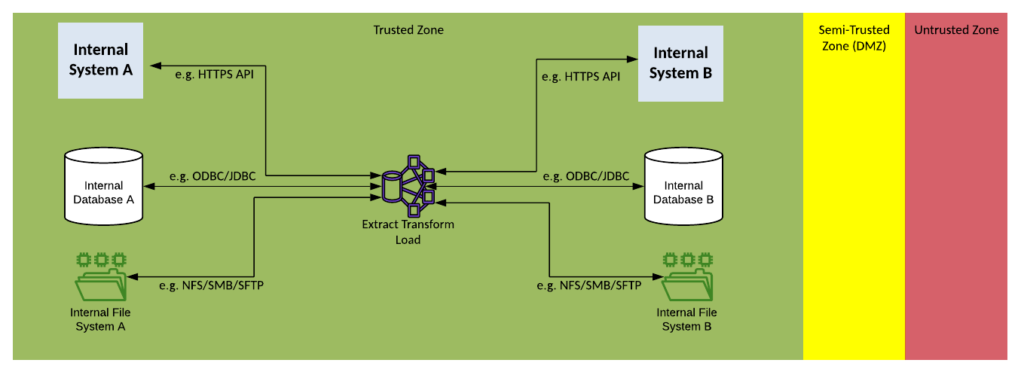

- Solution Pattern Name: ETL-Internal

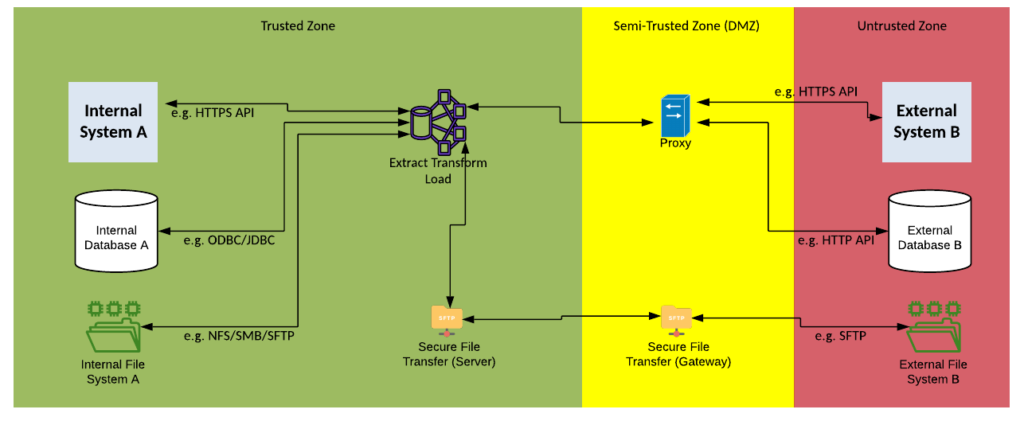

- Solution Pattern Name: ETL-External

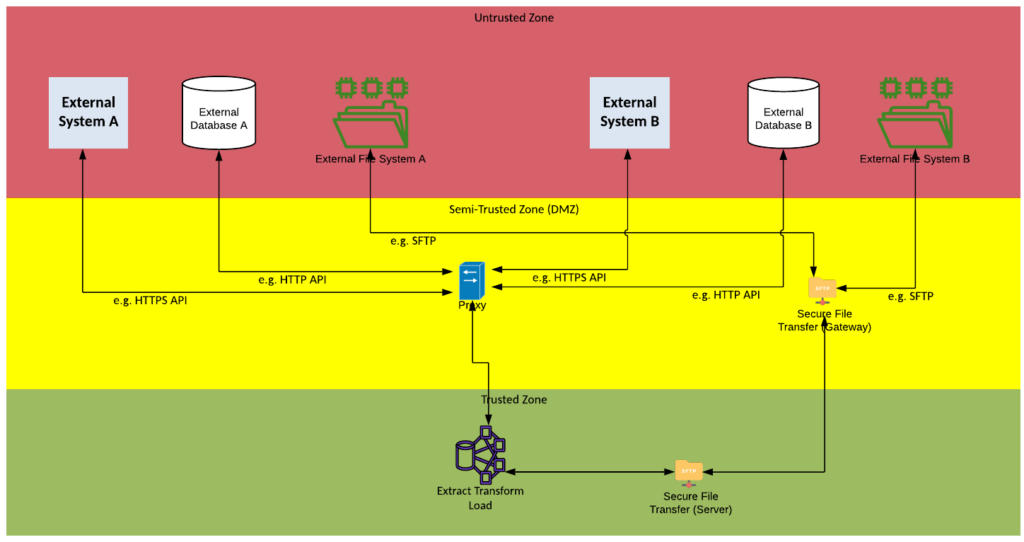

- Solution Pattern Name: ETL-Cloud

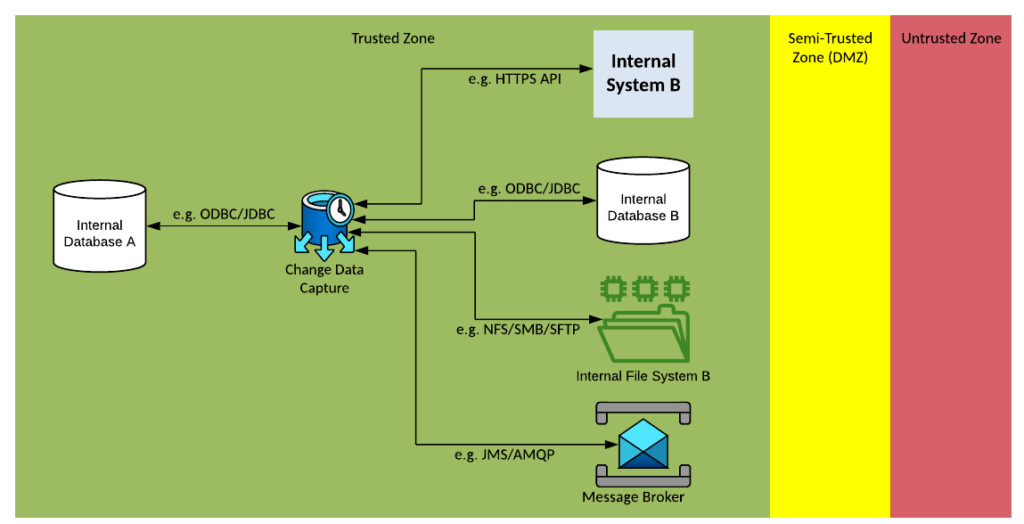

- Solution Pattern Name: CDC-Internal

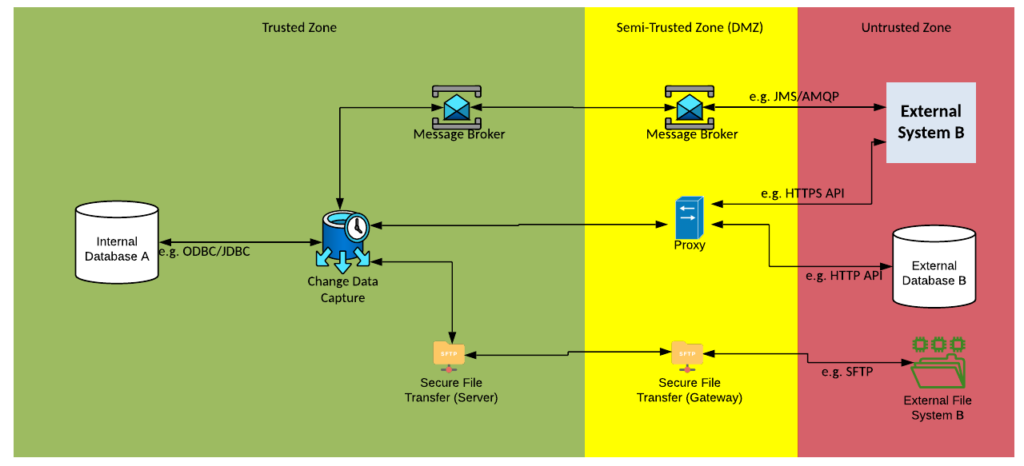

- Solution Pattern Name: CDC-External

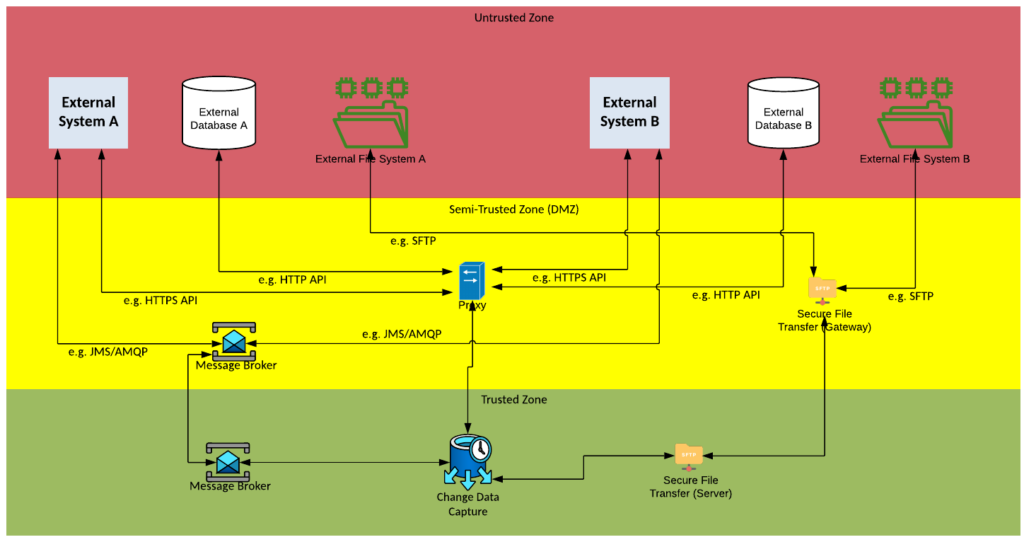

- Solution Pattern Name: CDC-Cloud

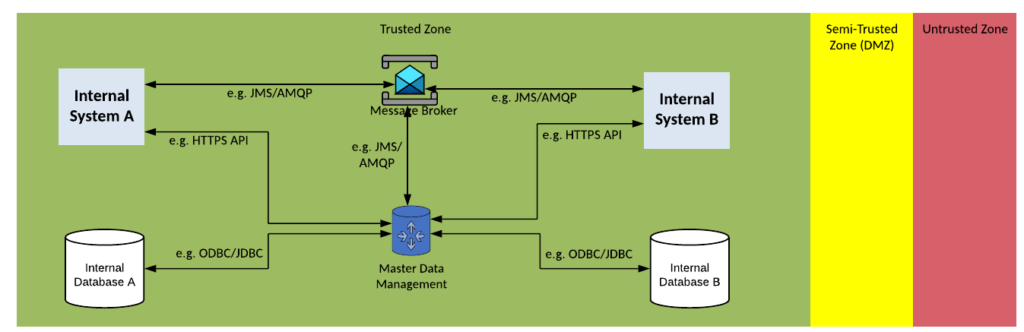

- Solution Pattern Name: MDM-Internal

- Solution Pattern Name: MDM-External

- Solution Pattern Name: MDM-Cloud

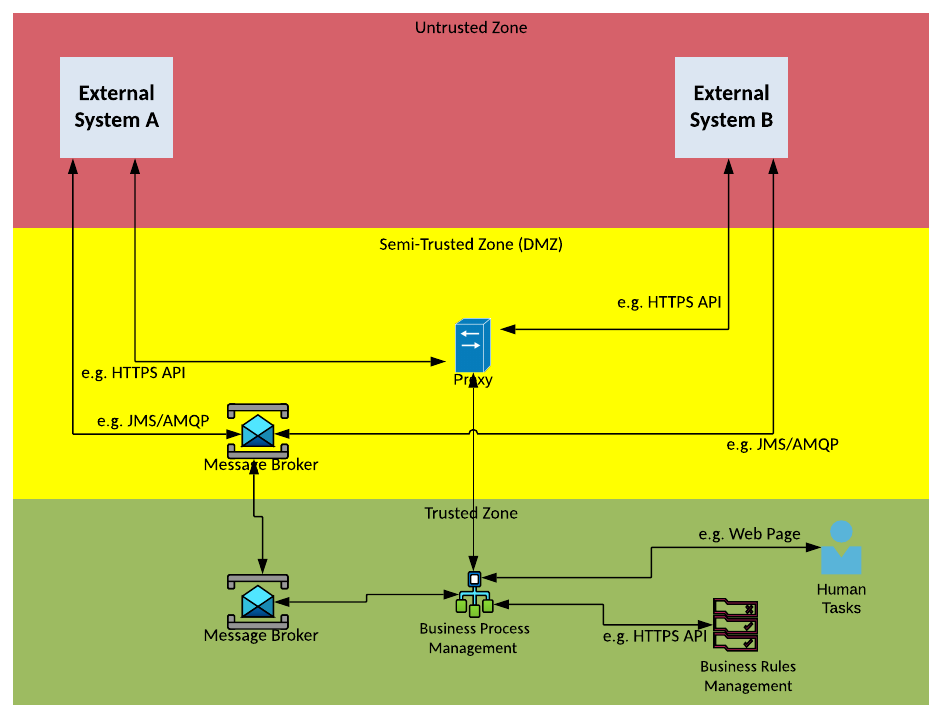

- Solution Pattern Name: Workflow-Internal

- Solution Pattern Name: Workflow-External

- Solution Pattern Name: Workflow-Cloud

- Solution Pattern Name: Native-Internal

- Solution Pattern Name: Native-External

- Solution Pattern Name: Native-Cloud

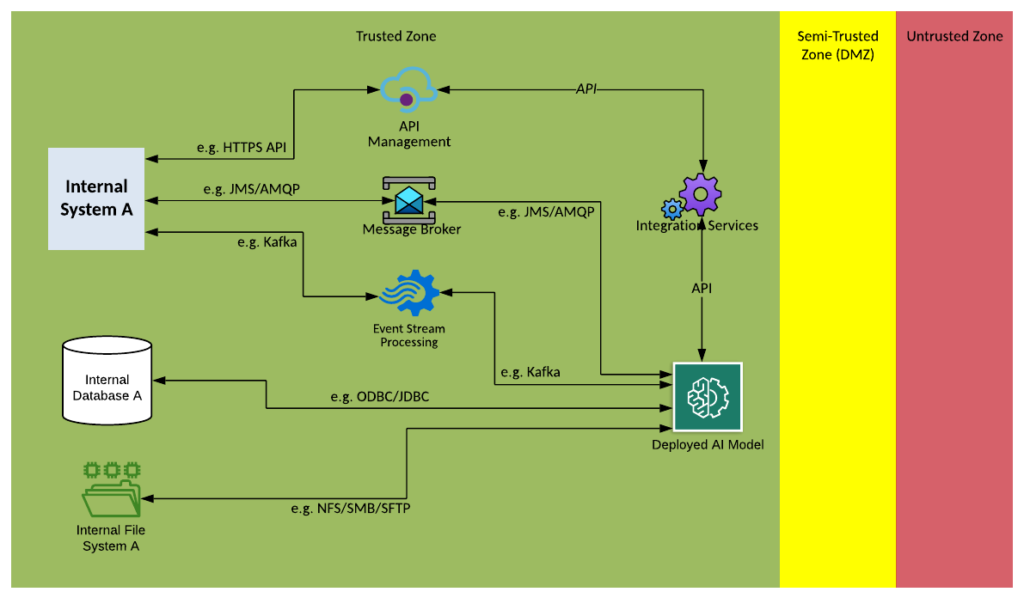

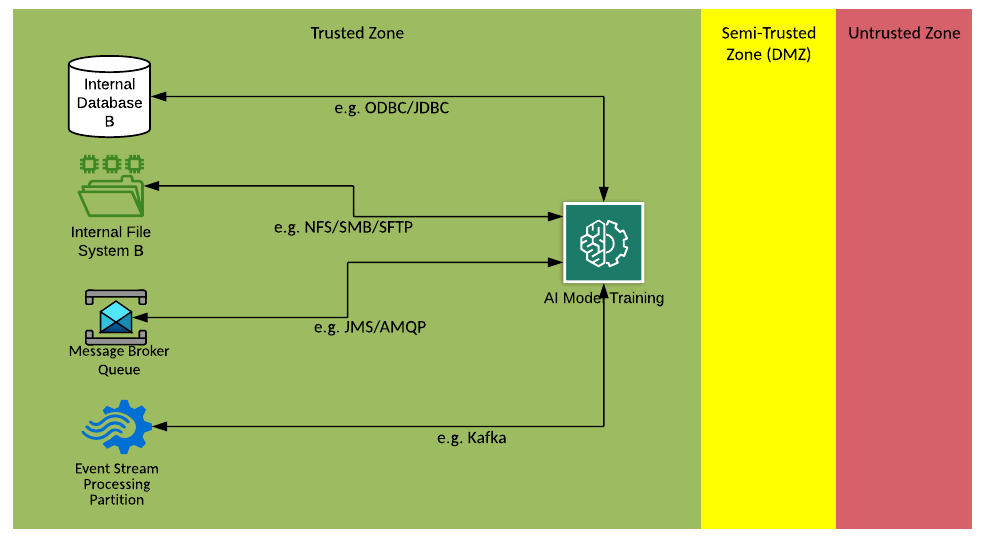

- Solution Pattern Name: AIML-Internal

- Solution Pattern Name: AIML-External

Solution Decision Framework

Software engineering has traditionally used software design patterns as reusable solutions to commonly occurring problems. In the integration domain, the Enterprise Integration Patterns (https://www.enterpriseintegrationpatterns.com/) are well known.

This solution decision framework guides an architect in selecting the most appropriate end-to-end solution pattern, built from the integration platform components, to solve common integration scenarios.

To determine the most appropriate integration solution pattern and platform components to meet a business requirement, follow the solution decision framework below.

- Requirements – Basic integration requirements must be captured to select the correct pattern.

- Reuse – Check whether an existing integration service can satisfy the requirements.

- Boundaries – Across which boundaries will the integration take place?

- Interaction – What style of interaction is required?

- Platform – What is the most appropriate platform component to implement the solution?

- Solution – Select an approved pattern based on the analysis.

Requirements

The following high-level integration requirements must be considered when analysing an integration solution:

- Purpose of the service. What real-world effect will the required service have in business terms? E.g., send a Purchase Order to a supplier electronically.

- What Business Function/Process/Capability does the integration enable or orchestrate? E.g., Procurement.

- What Information Objects are exchanged by the integration? Objects can be selected from a common vocabulary or Enterprise Information Model. E.g., Customer.

- What volume and frequency of data will pass through the integration? E.g., a daily batch of 10,000 records, or 1 request per minute per device.

- How many consumers may use this integration? E.g., 500 field workers.

- How many provider systems may provide data for this integration? E.g., customer data comes from two CRM systems.

- What will trigger the service, and when? E.g., real-time on employee update, or a daily batch extract of all employee changes at 9 PM.

- Where are the service providers and consumers located, and will the integration cross any boundaries? E.g., internal systems, external systems.

- How will service consumers and providers interact: Request/Reply, Publish/Subscribe or Batch? This is covered in detail in the Interaction section.

- Which integration technologies do the service consumers and providers support? Do they support protocols such as HTTP or JMS? Do they have native integration plugins that accelerate integration, e.g., a Salesforce adapter?

- What is the data classification of the data exchanged? This will drive the security controls required.

If these high-level integration requirements are not known at the time of analysis, there is a risk of rework because an incorrect pattern may be selected. Implementation estimates should be increased to reflect the level of uncertainty.
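You can capture the requirements above as a structured record so that gaps are visible before a pattern is chosen. The Python below is an illustrative sketch only. The `IntegrationRequirements` class, its field names and the `unknown_requirements` helper are hypothetical and are not part of any platform. A field left as `None` stands for a requirement that is not yet known. As noted above, each such gap is a risk of rework.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class IntegrationRequirements:
    """Hypothetical record of the high-level requirements; None means 'not yet known'."""
    purpose: Optional[str] = None
    business_capability: Optional[str] = None
    information_objects: Optional[List[str]] = None
    volume_and_frequency: Optional[str] = None
    consumer_count: Optional[int] = None
    provider_count: Optional[int] = None
    trigger: Optional[str] = None
    boundary: Optional[str] = None
    interaction: Optional[str] = None
    supported_technologies: Optional[List[str]] = None
    data_classification: Optional[str] = None


def unknown_requirements(req: IntegrationRequirements) -> List[str]:
    """Return the names of requirements not captured yet, in field order."""
    return [f.name for f in fields(req) if getattr(req, f.name) is None]


req = IntegrationRequirements(
    purpose="Send a Purchase Order to a supplier electronically",
    business_capability="Procurement",
    information_objects=["Purchase Order"],
)
missing = unknown_requirements(req)
print(f"{len(missing)} of {len(fields(req))} requirements unknown: {missing}")
```

The number of unknown fields could feed into the contingency applied to implementation estimates. How that number is weighted is left to the architect.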

Reuse

An API and Service Catalogue is a structured repository or database that contains detailed information about the various APIs and services available within an organization or across multiple systems.

Search the API and Service Catalogue for existing services which can be reused to meet the integration requirements captured above.

If a service is found that partially meets the requirements, investigate creating a new version of the service that is expanded to meet the new requirements. If no suitable service can be found, continue through the solution decision framework to determine the recommended end-to-end solution pattern for the new service.
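The reuse check can be sketched as follows, assuming a simple in-memory catalogue. Several details are hypothetical: the `CatalogueEntry` structure, the matching rule (same business capability, compared by information objects) and the sample services. A real API and Service Catalogue would be queried through its own search interface.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Tuple


@dataclass(frozen=True)
class CatalogueEntry:
    """Hypothetical API and Service Catalogue entry."""
    name: str
    version: int
    capability: str
    information_objects: FrozenSet[str]


def find_reuse_candidates(
    catalogue: Iterable[CatalogueEntry], capability: str, objects: Iterable[str]
) -> Tuple[List[CatalogueEntry], List[CatalogueEntry]]:
    """Split entries for the same capability into full and partial matches."""
    required = set(objects)
    full, partial = [], []
    for entry in catalogue:
        if entry.capability != capability:
            continue
        overlap = required & entry.information_objects
        if overlap == required:
            full.append(entry)     # can be reused as-is
        elif overlap:
            partial.append(entry)  # candidate for a new, expanded version
    return full, partial


def reuse_decision(full: List[CatalogueEntry], partial: List[CatalogueEntry]) -> str:
    """Apply the reuse guidance: reuse, extend with a new version, or build new."""
    if full:
        return f"Reuse {full[0].name} v{full[0].version}"
    if partial:
        return f"Investigate v{partial[0].version + 1} of {partial[0].name}"
    return "No match: continue the decision framework for a new service"


catalogue = [
    CatalogueEntry("Supplier Orders API", 1, "Procurement", frozenset({"Purchase Order"})),
    CatalogueEntry("Supplier Master API", 2, "Procurement", frozenset({"Supplier", "Purchase Order"})),
    CatalogueEntry("Customer API", 3, "Sales", frozenset({"Customer"})),
]
print(reuse_decision(*find_reuse_candidates(catalogue, "Procurement", ["Purchase Order", "Supplier"])))
```

With the sample data, a request for Purchase Order and Supplier data is fully covered by the hypothetical "Supplier Master API". A request for Purchase Order and Invoice data only partially matches, so the sketch suggests investigating a new version instead.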

Boundaries

The boundaries that the integration crosses must be understood. Choose a boundary from the table below.

| Boundary | Description | Context Diagram |
|---|---|---|
| Subsystem | Between components within a system |  |
| Internal | Between internal systems within the same organisation (the application is hosted on a network controlled by the organisation) |  |
| External | With an external system (the application is hosted on a network not controlled by the organisation, e.g., SaaS applications) |  |
| Cloud | Between external systems (cloud to cloud), e.g., ServiceNow to Salesforce |  |

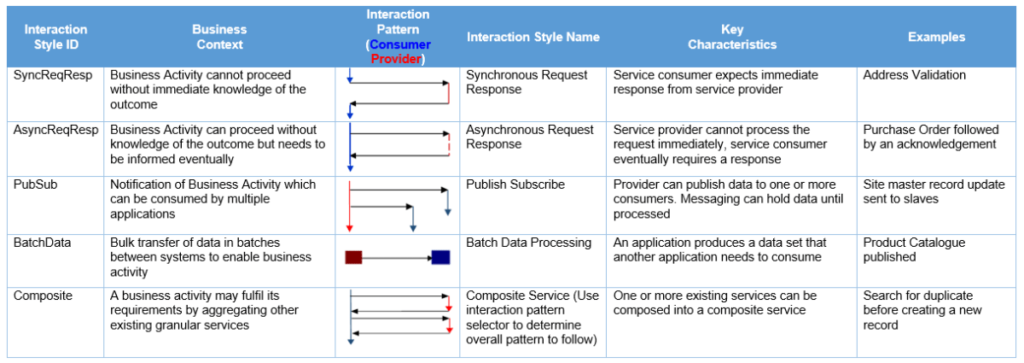

Interaction

The interaction style between consumers and providers must be understood. Choose an interaction style from the table below. Consider the overall end-to-end interaction style in light of the requirements captured above. Composite interactions combine two or more of these interaction styles.

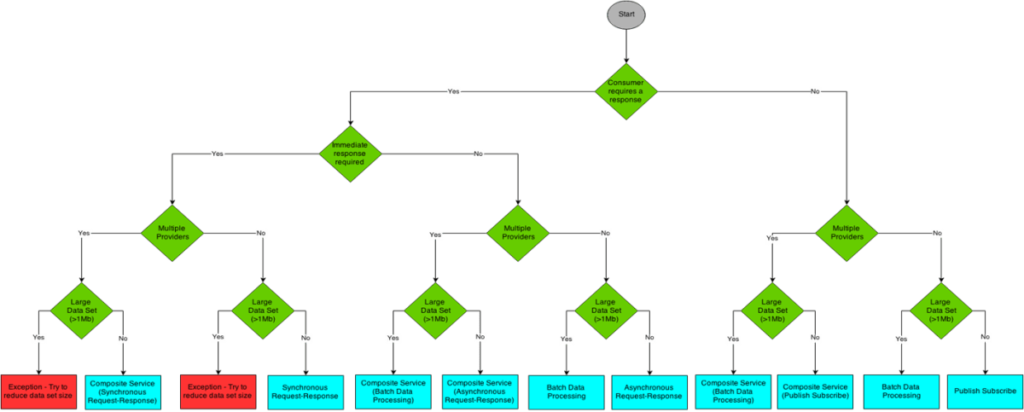

Use the Interaction Style selection tree below to determine the appropriate interaction style:
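The selection tree itself is not reproduced here. As a rough stand-in only, the sketch below encodes one plausible order of questions. It uses the interaction style names from the solution table (`SyncReqResp`, `AsyncReqResp`, `PubSub`, `BatchData`). The three yes/no questions and their order are assumptions, not the framework's own tree.

```python
from enum import Enum


class Interaction(Enum):
    SYNC_REQ_RESP = "SyncReqResp"
    ASYNC_REQ_RESP = "AsyncReqResp"
    PUB_SUB = "PubSub"
    BATCH_DATA = "BatchData"


def select_interaction(is_batch: bool, notify_many_consumers: bool,
                       caller_waits_for_reply: bool) -> Interaction:
    """Assumed, simplified decision order; not the document's selection tree."""
    if is_batch:                   # e.g. daily batch extract at 9 PM
        return Interaction.BATCH_DATA
    if notify_many_consumers:      # e.g. real-time event on employee update
        return Interaction.PUB_SUB
    if caller_waits_for_reply:     # consumer blocks until the provider answers
        return Interaction.SYNC_REQ_RESP
    return Interaction.ASYNC_REQ_RESP  # reply arrives later, correlated to the request


print(select_interaction(is_batch=False, notify_many_consumers=False,
                         caller_waits_for_reply=True).value)
```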

Integration Platform Component

Use the component list below to match the business requirements of the new integration service with the key characteristics of each Integration Platform component to determine the appropriate platform to implement the integration solution.

- Manual – (Manual Process) – A human can perform the integration with a manual process that meets the service level requirements. The integration involves a small number of low-frequency transactions, e.g., a weekly data extract. The cost of the manual process is far less than the cost of creating and supporting an automated service over the lifetime of the service.

- Middleware – (Middleware Services) – The integration must be performed in near real-time. The integration requires asynchronous messaging, e.g., a message is passed asynchronously from a producer to a consumer via a topic or queue. The integration may require mediation from one protocol to another, e.g., HTTP to JMS. The integration may require data transformation from one format to another, e.g., JSON to CSV. The integration needs to be routed based on rules or the content of the transaction.

- APIM – (API Management) – The integration involves HTTP web APIs such as REST or SOAP. Policies need to be applied to the API to protect the backend systems. Examples include performance policies (throttling the number of API calls per consumer or provider system) and security policies (verifying a consumer’s identity before allowing access to API functionality).

- ETL – (Extract Transform Load) – The integration is performed in batches. The integration extracts large sets of data from one datastore and makes them available in another datastore. The integration may require some transformation between datastores. The integration needs to be resilient and able to resume if interrupted.

- CDC – (Change Data Capture) – The integration requires changes made in one system’s datastore to be made available to other systems. The integration only requires data that has changed. The changes need to be made available in near real-time or in small batches, typically in windows of less than 15 minutes. The source may be a legacy system that the organisation does not want to invest in.

- SFT – (Secure File Transfer) – The integration requires files to be transferred between systems. The integration needs to be resilient and able to resume if interrupted.

- MDM – (Master Data Management) – The organisation’s reference data needs to be managed in a system that provides a single point of reference. There are four major patterns for storing reference data in an MDM system:
  - Registry – a central registry links records across various source systems.
  - Consolidation – data is taken from multiple source systems to create a central golden copy.
  - Coexistence – data is synchronised across multiple systems to keep all systems aligned to the golden record.
  - Centralized – the golden record is authored in the MDM system and pushed out to other systems.

  There are three main patterns for integrating MDM systems with business systems:
  - Data consolidation – source systems push their changes to the MDM to create the golden record.
  - Data federation – systems query the MDM, which returns the golden record data.
  - Data propagation – the golden record is synchronised with interested systems.

- ESP – (Event Stream Processing) – The integration requires events to be captured from sources at very high frequency, e.g., several thousand messages per second. These events need to be queried, filtered, aggregated, enriched or analysed. The events are retained for a period in a message log so they can be replayed. Event consumers maintain their own state and request events from the position in the log they are up to.

- Workflow – (Workflow) – A business process exists that can be automated by breaking it up into documents, information or tasks that can be passed between, and tracked across, the participants of the business process. The process can be modelled and executed in a Workflow component such as a Business Process Management (BPM) tool. Where multiple interfaces or applications require the same decision logic, that logic can be centrally managed in a Business Rule Management System (BRMS) and invoked via an API call. Recent microservices architectures have moved away from orchestrating services from a single component such as a BPM tool, because the orchestrator can become a bottleneck that is difficult to update. Instead, microservices architectures typically implement independent microservices. Each one performs its part of the business process in isolation by reacting to published events, an approach called choreography. Choreography is a more scalable and adaptable architecture, but it requires supporting services such as distributed logging and tracing.

- AIML – (Artificial Intelligence / Machine Learning) – Artificial intelligence models exist that need to be trained with data flowing through the integration platform. The nominated data can be captured and sent to the model for training. Once trained, the model can be invoked at runtime to make intelligent decisions.

- Native – (Native integration) – Some enterprise applications and SaaS software come with native integration capabilities. These often provide a set of supported integrations with other applications or common technologies, e.g., identity providers. Consider native integration when it provides secure, accelerated and supported integration with other software that would be far more complex to implement on an integration platform component.
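Two of the Middleware characteristics listed above are content-based routing and format transformation. The sketch below illustrates both in plain Python. It does not show how any particular middleware product is configured. The routing rules, the 10,000 amount threshold and the queue names are hypothetical.

```python
import csv
import io
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical routing rules, evaluated in order: the first matching predicate wins.
Rule = Tuple[Callable[[dict], bool], str]
ROUTES: List[Rule] = [
    (lambda m: m.get("type") == "PurchaseOrder" and m.get("amount", 0) > 10_000, "queue.po.approval"),
    (lambda m: m.get("type") == "PurchaseOrder", "queue.po.standard"),
]
DEFAULT_ROUTE = "queue.unrouted"  # hypothetical catch-all destination


def route(message: dict) -> str:
    """Content-based router: choose a destination queue from the message content."""
    for predicate, destination in ROUTES:
        if predicate(message):
            return destination
    return DEFAULT_ROUTE


def json_to_csv(payload: str) -> str:
    """Transform a JSON array of flat objects into CSV text (header from the first object)."""
    records: List[Dict] = json.loads(payload)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()


print(route({"type": "PurchaseOrder", "amount": 25_000}))  # queue.po.approval
print(json_to_csv('[{"id": 1, "name": "Widget"}, {"id": 2, "name": "Gadget"}]'))
```

A production flow would also need to handle an empty array and records with differing keys. The sketch omits both for brevity.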

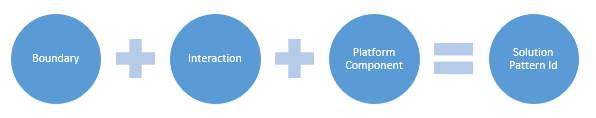
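The ESP characteristics described above can be illustrated with a small in-memory model: events are retained in a log for replay, and consumers track their own position. This is a simplified sketch that assumes a single partition. `EventLog`, `Consumer` and the device readings are hypothetical and stand in for a real event streaming platform.

```python
from collections import defaultdict
from typing import Dict, List


class EventLog:
    """In-memory stand-in for a retained, append-only event log (single partition)."""

    def __init__(self) -> None:
        self._events: List[dict] = []

    def append(self, event: dict) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset: int, max_events: int = 100) -> List[dict]:
        return self._events[offset:offset + max_events]


class Consumer:
    """Consumer that keeps its own offset, so it can resume or replay independently."""

    def __init__(self, log: EventLog) -> None:
        self.log = log
        self.offset = 0

    def poll(self) -> List[dict]:
        batch = self.log.read_from(self.offset)
        self.offset += len(batch)
        return batch

    def replay_from(self, offset: int) -> None:
        self.offset = offset


def total_per_device(events: List[dict]) -> Dict[str, int]:
    """Example aggregation: sum reading values per device."""
    totals: Dict[str, int] = defaultdict(int)
    for event in events:
        totals[event["device"]] += event["value"]
    return dict(totals)


log = EventLog()
for reading in ({"device": "d1", "value": 3}, {"device": "d2", "value": 5}, {"device": "d1", "value": 4}):
    log.append(reading)

consumer = Consumer(log)
print(total_per_device(consumer.poll()))  # {'d1': 7, 'd2': 5}
```

Calling `consumer.replay_from(1)` and polling again re-reads the retained events from offset 1. Other consumers reading the same log are unaffected.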

Solution Selection

After capturing the high-level requirements of the integration solution and verifying that no existing service can be reused, a new integration component may need to be built.

Combine the names of the boundary, interaction style and platform component selected above to determine the appropriate end-to-end integration pattern.

For example, an interface has the following responses to the decision framework:

| Boundary | Interaction | Platform Component | Solution Id |
|---|---|---|---|
| Internal | SyncReqResp | Middleware | Internal-SyncReqResp-Middleware |

The Solution Id would be Internal-SyncReqResp-Middleware.
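Once the three choices are made, composing the Solution Id is mechanical. The following sketch takes its boundary, interaction and platform component names from the solution table below. The `solution_id` function itself is hypothetical.

```python
BOUNDARIES = ("SubSystem", "Internal", "External", "Cloud")
INTERACTIONS = ("SyncReqResp", "AsyncReqResp", "PubSub", "BatchData")
COMPONENTS = ("Manual", "Middleware", "APIM", "ETL", "CDC", "FileTransfer",
              "MDM", "ESP", "Workflow", "AIML", "Native")


def solution_id(boundary: str, interaction: str, component: str) -> str:
    """Compose a Solution Id in Boundary-Interaction-Component order, rejecting unknown names."""
    for value, allowed, label in (
        (boundary, BOUNDARIES, "boundary"),
        (interaction, INTERACTIONS, "interaction"),
        (component, COMPONENTS, "platform component"),
    ):
        if value not in allowed:
            raise ValueError(f"unknown {label}: {value!r}")
    return f"{boundary}-{interaction}-{component}"


print(solution_id("Internal", "SyncReqResp", "Middleware"))  # Internal-SyncReqResp-Middleware
```

These lists give 4 × 4 × 11 = 176 possible Solution Ids. Note that the platform component is named `FileTransfer` in the Solution Id, not `SFT`.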

The table below contains the full list of end-to-end solution patterns. Use the decision framework above to determine the solution details, then map them to a solution pattern name. The combination selected may not be a recommended one; in that case, the Comments column provides alternative recommendations. Details for each solution pattern can be found in the next section.

| Boundary | Interaction | Platform Component | Solution Id | Solution Pattern Name | Comments |
|---|---|---|---|---|---|
| SubSystem | SyncReqResp | Manual | SubSystem-SyncReqResp-Manual | N/A | Not valid for synchronous interactions |
| SubSystem | SyncReqResp | Middleware | SubSystem-SyncReqResp-Middleware | Middleware-Internal | |
| SubSystem | SyncReqResp | APIM | SubSystem-SyncReqResp-APIM | API-Internal | |
| SubSystem | SyncReqResp | ETL | SubSystem-SyncReqResp-ETL | N/A | Not valid for synchronous interactions |
| SubSystem | SyncReqResp | CDC | SubSystem-SyncReqResp-CDC | N/A | Not valid for synchronous interactions |
| SubSystem | SyncReqResp | FileTransfer | SubSystem-SyncReqResp-FileTransfer | N/A | Not valid for synchronous interactions |
| SubSystem | SyncReqResp | MDM | SubSystem-SyncReqResp-MDM | MDM-Internal | |
| SubSystem | SyncReqResp | ESP | SubSystem-SyncReqResp-ESP | ESP-Internal | Asynchronous processing is preferred for ESP; a synchronous API using middleware may be a better solution |
| SubSystem | SyncReqResp | Workflow | SubSystem-SyncReqResp-Workflow | Workflow-Internal | |
| SubSystem | SyncReqResp | AIML | SubSystem-SyncReqResp-AIML | AIML-Internal | |
| SubSystem | SyncReqResp | Native | SubSystem-SyncReqResp-Native | Native-Internal | |
| SubSystem | AsyncReqResp | Manual | SubSystem-AsyncReqResp-Manual | Manual-Internal | |
| SubSystem | AsyncReqResp | Middleware | SubSystem-AsyncReqResp-Middleware | Middleware-Internal | |
| SubSystem | AsyncReqResp | APIM | SubSystem-AsyncReqResp-APIM | API-Internal | |
| SubSystem | AsyncReqResp | ETL | SubSystem-AsyncReqResp-ETL | N/A | Consider managing data between components of the same application within the application's own services |
| SubSystem | AsyncReqResp | CDC | SubSystem-AsyncReqResp-CDC | N/A | Consider managing data between components of the same application within the application's own services |
| SubSystem | AsyncReqResp | FileTransfer | SubSystem-AsyncReqResp-FileTransfer | N/A | Consider managing data between components of the same application within the application's own services |
| SubSystem | AsyncReqResp | MDM | SubSystem-AsyncReqResp-MDM | MDM-Internal | |
| SubSystem | AsyncReqResp | ESP | SubSystem-AsyncReqResp-ESP | ESP-Internal | |
| SubSystem | AsyncReqResp | Workflow | SubSystem-AsyncReqResp-Workflow | Workflow-Internal | |
| SubSystem | AsyncReqResp | AIML | SubSystem-AsyncReqResp-AIML | AIML-Internal | |
| SubSystem | AsyncReqResp | Native | SubSystem-AsyncReqResp-Native | Native-Internal | |
| SubSystem | PubSub | Manual | SubSystem-PubSub-Manual | Manual-Internal | |
| SubSystem | PubSub | Middleware | SubSystem-PubSub-Middleware | Middleware-Internal | |
| SubSystem | PubSub | APIM | SubSystem-PubSub-APIM | API-Internal | |
| SubSystem | PubSub | ETL | SubSystem-PubSub-ETL | N/A | Not valid for publish/subscribe interactions; consider middleware or CDC |
| SubSystem | PubSub | CDC | SubSystem-PubSub-CDC | CDC-Internal | |
| SubSystem | PubSub | FileTransfer | SubSystem-PubSub-FileTransfer | N/A | Not valid for publish/subscribe interactions |
| SubSystem | PubSub | MDM | SubSystem-PubSub-MDM | MDM-Internal | |
| SubSystem | PubSub | ESP | SubSystem-PubSub-ESP | ESP-Internal | |
| SubSystem | PubSub | Workflow | SubSystem-PubSub-Workflow | Workflow-Internal | |
| SubSystem | PubSub | AIML | SubSystem-PubSub-AIML | AIML-Internal | |
| SubSystem | PubSub | Native | SubSystem-PubSub-Native | Native-Internal | |
| SubSystem | BatchData | Manual | SubSystem-BatchData-Manual | Manual-Internal | |
| SubSystem | BatchData | Middleware | SubSystem-BatchData-Middleware | Middleware-Internal | |
| SubSystem | BatchData | APIM | SubSystem-BatchData-APIM | API-Internal | The API must be designed carefully for large data sets; consider paging the data and throttling limits |
| SubSystem | BatchData | ETL | SubSystem-BatchData-ETL | ETL-Internal | |
| SubSystem | BatchData | CDC | SubSystem-BatchData-CDC | CDC-Internal | Consider small batches |
| SubSystem | BatchData | FileTransfer | SubSystem-BatchData-FileTransfer | FileTransfer-Internal | |
| SubSystem | BatchData | MDM | SubSystem-BatchData-MDM | MDM-Internal | |
| SubSystem | BatchData | ESP | SubSystem-BatchData-ESP | ESP-Internal | Event streams are not suitable for large data sets; the data needs to be broken up, or a reference to its location passed instead |
| SubSystem | BatchData | Workflow | SubSystem-BatchData-Workflow | Workflow-Internal | |
| SubSystem | BatchData | AIML | SubSystem-BatchData-AIML | AIML-Internal | |
| SubSystem | BatchData | Native | SubSystem-BatchData-Native | Native-Internal | |
| Internal | SyncReqResp | Manual | Internal-SyncReqResp-Manual | N/A | Not valid for synchronous interactions |
| Internal | SyncReqResp | Middleware | Internal-SyncReqResp-Middleware | Middleware-Internal | |
| Internal | SyncReqResp | APIM | Internal-SyncReqResp-APIM | API-Internal | |
| Internal | SyncReqResp | ETL | Internal-SyncReqResp-ETL | N/A | Not valid for synchronous interactions |
| Internal | SyncReqResp | CDC | Internal-SyncReqResp-CDC | N/A | Not valid for synchronous interactions |
| Internal | SyncReqResp | FileTransfer | Internal-SyncReqResp-FileTransfer | N/A | Not valid for synchronous interactions |
| Internal | SyncReqResp | MDM | Internal-SyncReqResp-MDM | MDM-Internal | |
| Internal | SyncReqResp | ESP | Internal-SyncReqResp-ESP | ESP-Internal | Asynchronous processing is preferred for ESP; a synchronous API using middleware may be a better solution |
| Internal | SyncReqResp | Workflow | Internal-SyncReqResp-Workflow | Workflow-Internal | |
| Internal | SyncReqResp | AIML | Internal-SyncReqResp-AIML | AIML-Internal | |
| Internal | SyncReqResp | Native | Internal-SyncReqResp-Native | Native-Internal | |
| Internal | AsyncReqResp | Manual | Internal-AsyncReqResp-Manual | Manual-Internal | |
| Internal | AsyncReqResp | Middleware | Internal-AsyncReqResp-Middleware | Middleware-Internal | |
| Internal | AsyncReqResp | APIM | Internal-AsyncReqResp-APIM | API-Internal | |
| Internal | AsyncReqResp | ETL | Internal-AsyncReqResp-ETL | ETL-Internal | Consider middleware over ETL for asynchronous interactions |
| Internal | AsyncReqResp | CDC | Internal-AsyncReqResp-CDC | N/A | Not valid for asynchronous request/reply; consider middleware |
| Internal | AsyncReqResp | FileTransfer | Internal-AsyncReqResp-FileTransfer | FileTransfer-Internal | |
| Internal | AsyncReqResp | MDM | Internal-AsyncReqResp-MDM | MDM-Internal | |
| Internal | AsyncReqResp | ESP | Internal-AsyncReqResp-ESP | ESP-Internal | |
| Internal | AsyncReqResp | Workflow | Internal-AsyncReqResp-Workflow | Workflow-Internal | |
| Internal | AsyncReqResp | AIML | Internal-AsyncReqResp-AIML | AIML-Internal | |
| Internal | AsyncReqResp | Native | Internal-AsyncReqResp-Native | Native-Internal | |
| Internal | PubSub | Manual | Internal-PubSub-Manual | Manual-Internal | |
| Internal | PubSub | Middleware | Internal-PubSub-Middleware | Middleware-Internal | |
| Internal | PubSub | APIM | Internal-PubSub-APIM | API-Internal | |
| Internal | PubSub | ETL | Internal-PubSub-ETL | N/A | Not valid for publish/subscribe interactions; consider middleware or CDC |
| Internal | PubSub | CDC | Internal-PubSub-CDC | CDC-Internal | |
| Internal | PubSub | FileTransfer | Internal-PubSub-FileTransfer | N/A | Not valid for publish/subscribe interactions |
| Internal | PubSub | MDM | Internal-PubSub-MDM | MDM-Internal | |
| Internal | PubSub | ESP | Internal-PubSub-ESP | ESP-Internal | |
| Internal | PubSub | Workflow | Internal-PubSub-Workflow | Workflow-Internal | |
| Internal | PubSub | AIML | Internal-PubSub-AIML | AIML-Internal | |
| Internal | PubSub | Native | Internal-PubSub-Native | Native-Internal | |
| Internal | BatchData | Manual | Internal-BatchData-Manual | Manual-Internal | |
| Internal | BatchData | Middleware | Internal-BatchData-Middleware | Middleware-Internal | |
| Internal | BatchData | APIM | Internal-BatchData-APIM | API-Internal | The API must be designed carefully for large data sets; consider paging the data and throttling limits |
| Internal | BatchData | ETL | Internal-BatchData-ETL | ETL-Internal | |
| Internal | BatchData | CDC | Internal-BatchData-CDC | CDC-Internal | Consider small batches |
| Internal | BatchData | FileTransfer | Internal-BatchData-FileTransfer | FileTransfer-Internal | |
| Internal | BatchData | MDM | Internal-BatchData-MDM | MDM-Internal | |
| Internal | BatchData | ESP | Internal-BatchData-ESP | ESP-Internal | Event streams are not suitable for large data sets; the data needs to be broken up, or a reference to its location passed instead |
| Internal | BatchData | Workflow | Internal-BatchData-Workflow | Workflow-Internal | |
| Internal | BatchData | AIML | Internal-BatchData-AIML | AIML-Internal | |
| Internal | BatchData | Native | Internal-BatchData-Native | Native-Internal | |
| External | SyncReqResp | Manual | External-SyncReqResp-Manual | N/A | Not valid for synchronous interactions |
| External | SyncReqResp | Middleware | External-SyncReqResp-Middleware | Middleware-External | |
| External | SyncReqResp | APIM | External-SyncReqResp-APIM | API-External | |
| External | SyncReqResp | ETL | External-SyncReqResp-ETL | N/A | Not valid for synchronous interactions |
| External | SyncReqResp | CDC | External-SyncReqResp-CDC | N/A | Not valid for synchronous interactions |
| External | SyncReqResp | FileTransfer | External-SyncReqResp-FileTransfer | N/A | Not valid for synchronous interactions |
| External | SyncReqResp | MDM | External-SyncReqResp-MDM | MDM-External | |
| External | SyncReqResp | ESP | External-SyncReqResp-ESP | ESP-External | |
| External | SyncReqResp | Workflow | External-SyncReqResp-Workflow | Workflow-External | |
| External | SyncReqResp | AIML | External-SyncReqResp-AIML | AIML-External | |
| External | SyncReqResp | Native | External-SyncReqResp-Native | Native-External | |
| External | AsyncReqResp | Manual | External-AsyncReqResp-Manual | Manual-External | |
| External | AsyncReqResp | Middleware | External-AsyncReqResp-Middleware | Middleware-External | |
| External | AsyncReqResp | APIM | External-AsyncReqResp-APIM | API-External | |
| External | AsyncReqResp | ETL | External-AsyncReqResp-ETL | ETL-External | May need to be used in conjunction with another component, such as Secure File Transfer, to manage batch data |
| External | AsyncReqResp | CDC | External-AsyncReqResp-CDC | N/A | Not valid for asynchronous request/reply; consider middleware |
| External | AsyncReqResp | FileTransfer | External-AsyncReqResp-FileTransfer | FileTransfer-External | |
| External | AsyncReqResp | MDM | External-AsyncReqResp-MDM | MDM-External | |
| External | AsyncReqResp | ESP | External-AsyncReqResp-ESP | ESP-External | |
| External | AsyncReqResp | Workflow | External-AsyncReqResp-Workflow | Workflow-External | |
| External | AsyncReqResp | AIML | External-AsyncReqResp-AIML | AIML-External | |
| External | AsyncReqResp | Native | External-AsyncReqResp-Native | Native-External | |
| External | PubSub | Manual | External-PubSub-Manual | Manual-External | |
| External | PubSub | Middleware | External-PubSub-Middleware | Middleware-External | |
| External | PubSub | APIM | External-PubSub-APIM | API-External | |
| External | PubSub | ETL | External-PubSub-ETL | N/A | Not valid for publish/subscribe interactions; consider middleware or CDC |
| External | PubSub | CDC | External-PubSub-CDC | CDC-External | |
| External | PubSub | FileTransfer | External-PubSub-FileTransfer | N/A | Not valid for publish/subscribe interactions |
| External | PubSub | MDM | External-PubSub-MDM | MDM-External | |
| External | PubSub | ESP | External-PubSub-ESP | ESP-External | |
| External | PubSub | Workflow | External-PubSub-Workflow | Workflow-External | |
| External | PubSub | AIML | External-PubSub-AIML | AIML-External | |
| External | PubSub | Native | External-PubSub-Native | Native-External | |
| External | BatchData | Manual | External-BatchData-Manual | Manual-External | |
| External | BatchData | Middleware | External-BatchData-Middleware | Middleware-External | |
| External | BatchData | APIM | External-BatchData-APIM | API-External | The API must be designed carefully for large data sets; consider paging the data and throttling limits |
| External | BatchData | ETL | External-BatchData-ETL | ETL-External | |
| External | BatchData | CDC | External-BatchData-CDC | CDC-External | Consider small batches |
| External | BatchData | FileTransfer | External-BatchData-FileTransfer | FileTransfer-External | |
| External | BatchData | MDM | External-BatchData-MDM | MDM-External | |
| External | BatchData | ESP | External-BatchData-ESP | ESP-External | Event streams are not suitable for large data sets; the data needs to be broken up, or a reference to its location passed instead |
| External | BatchData | Workflow | External-BatchData-Workflow | Workflow-External | |
| External | BatchData | AIML | External-BatchData-AIML | AIML-External | |
| External | BatchData | Native | External-BatchData-Native | Native-External | |
| Cloud | SyncReqResp | Manual | Cloud-SyncReqResp-Manual | N/A | Not valid for synchronous interactions |
| Cloud | SyncReqResp | Middleware | Cloud-SyncReqResp-Middleware | Middleware-Cloud | |
| Cloud | SyncReqResp | APIM | Cloud-SyncReqResp-APIM | Native-Cloud | |
| Cloud | SyncReqResp | ETL | Cloud-SyncReqResp-ETL | N/A | Not valid for synchronous interactions |
| Cloud | SyncReqResp | CDC | Cloud-SyncReqResp-CDC | N/A | Not valid for synchronous interactions |
| Cloud | SyncReqResp | FileTransfer | Cloud-SyncReqResp-FileTransfer | N/A | Not valid for synchronous interactions |
| Cloud | SyncReqResp | MDM | Cloud-SyncReqResp-MDM | MDM-Cloud | |
| Cloud | SyncReqResp | ESP | Cloud-SyncReqResp-ESP | ESP-Cloud | Asynchronous processing is preferred for ESP; a synchronous API using middleware may be a better solution |
| Cloud | SyncReqResp | Workflow | Cloud-SyncReqResp-Workflow | Workflow-Cloud | |
| Cloud | SyncReqResp | AIML | Cloud-SyncReqResp-AIML | AIML-External | |
| Cloud | SyncReqResp | Native | Cloud-SyncReqResp-Native | Native-Cloud | |
| Cloud | AsyncReqResp | Manual | Cloud-AsyncReqResp-Manual | Manual-Cloud | |
| Cloud | AsyncReqResp | Middleware | Cloud-AsyncReqResp-Middleware | Middleware-Cloud | |
| Cloud | AsyncReqResp | APIM | Cloud-AsyncReqResp-APIM | Native-Cloud | |
| Cloud | AsyncReqResp | ETL | Cloud-AsyncReqResp-ETL | ETL-Cloud | |
| Cloud | AsyncReqResp | CDC | Cloud-AsyncReqResp-CDC | N/A | Not valid for asynchronous request/reply; consider middleware |
| Cloud | AsyncReqResp | FileTransfer | Cloud-AsyncReqResp-FileTransfer | FileTransfer-Cloud | |
| Cloud | AsyncReqResp | MDM | Cloud-AsyncReqResp-MDM | MDM-Cloud | |
| Cloud | AsyncReqResp | ESP | Cloud-AsyncReqResp-ESP | ESP-Cloud | |
| Cloud | AsyncReqResp | Workflow | Cloud-AsyncReqResp-Workflow | Workflow-Cloud | |
| Cloud | AsyncReqResp | AIML | Cloud-AsyncReqResp-AIML | AIML-External | |
| Cloud | AsyncReqResp | Native | Cloud-AsyncReqResp-Native | Native-Cloud | |
| Cloud | PubSub | Manual | Cloud-PubSub-Manual | Manual-Cloud | |
| Cloud | PubSub | Middleware | Cloud-PubSub-Middleware | Middleware-Cloud | |
| Cloud | PubSub | APIM | Cloud-PubSub-APIM | Native-Cloud | |
| Cloud | PubSub | ETL | Cloud-PubSub-ETL | N/A | Not valid for publish/subscribe interactions; consider middleware or CDC |
| Cloud | PubSub | CDC | Cloud-PubSub-CDC | CDC-Cloud | |
| Cloud | PubSub | FileTransfer | Cloud-PubSub-FileTransfer | N/A | Not valid for publish/subscribe interactions |
| Cloud | PubSub | MDM | Cloud-PubSub-MDM | MDM-Cloud | |
| Cloud | PubSub | ESP | Cloud-PubSub-ESP | ESP-Cloud | |
| Cloud | PubSub | Workflow | Cloud-PubSub-Workflow | Workflow-Cloud | |
| Cloud | PubSub | AIML | Cloud-PubSub-AIML | AIML-External | |
| Cloud | PubSub | Native | Cloud-PubSub-Native | Native-Cloud | |
| Cloud | BatchData | Manual | Cloud-BatchData-Manual | Manual-Cloud | |
| Cloud | BatchData | Middleware | Cloud-BatchData-Middleware | Middleware-Cloud | |
| Cloud | BatchData | APIM | Cloud-BatchData-APIM | Native-Cloud | The API must be designed carefully for large data sets; consider paging the data and throttling limits |
| Cloud | BatchData | ETL | Cloud-BatchData-ETL | ETL-Cloud | |
| Cloud | BatchData | CDC | Cloud-BatchData-CDC | CDC-Cloud | Consider small batches |
| Cloud | BatchData | FileTransfer | Cloud-BatchData-FileTransfer | FileTransfer-Cloud | |
| Cloud | BatchData | MDM | Cloud-BatchData-MDM | MDM-Cloud | |
| Cloud | BatchData | ESP | Cloud-BatchData-ESP | ESP-Cloud | Event streams are not suitable for large data sets; the data needs to be broken up, or a reference to its location passed instead |
| Cloud | BatchData | Workflow | Cloud-BatchData-Workflow | Workflow-Cloud | |
| Cloud | BatchData | AIML | Cloud-BatchData-AIML | AIML-External | |
| Cloud | BatchData | Native | Cloud-BatchData-Native | Native-Cloud | |
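Once a Solution Id is known, looking up its pattern is a dictionary access. For illustration, the sketch below includes only a handful of rows from the table above; a real tool would load all of them. `SOLUTION_PATTERNS` and `lookup_pattern` are hypothetical names. N/A entries are modelled as `None` and reported together with their comment.

```python
from typing import Dict, Optional, Tuple

# Illustrative subset of the solution table:
# Solution Id -> (solution pattern name, or None for N/A; comment)
SOLUTION_PATTERNS: Dict[str, Tuple[Optional[str], str]] = {
    "Internal-SyncReqResp-Middleware": ("Middleware-Internal", ""),
    "Internal-SyncReqResp-ETL": (None, "Not valid for synchronous interactions"),
    "Cloud-SyncReqResp-APIM": ("Native-Cloud", ""),
    "Cloud-BatchData-CDC": ("CDC-Cloud", "Consider small batches"),
}


def lookup_pattern(solution_id: str) -> Tuple[str, str]:
    """Return (pattern name, comment); raise if the Id is unknown or the combination is N/A."""
    if solution_id not in SOLUTION_PATTERNS:
        raise KeyError(f"{solution_id} is not in the solution table")
    pattern, comment = SOLUTION_PATTERNS[solution_id]
    if pattern is None:
        raise ValueError(f"{solution_id} is not a valid combination: {comment}")
    return pattern, comment


print(lookup_pattern("Internal-SyncReqResp-Middleware"))  # ('Middleware-Internal', '')
```

Returning the comment alongside the pattern name keeps guidance such as "Consider small batches" visible to whoever applies the pattern.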

Solution Pattern Catalogue

This section contains the details of the solution patterns. Each pattern provides details such as:

- a description of the problem that needs to be solved.

- a description of the solution.

- guidelines that describe how the solution should be implemented on the integration platform components.

- guidelines that describe how security should be enforced.

- a diagram showing the flow through platform components.

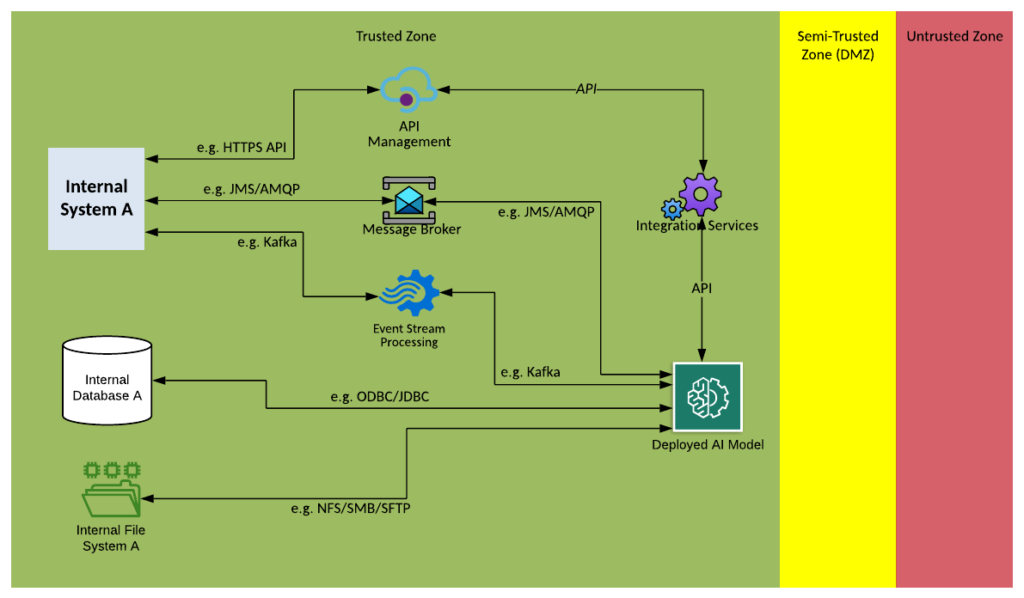

The patterns consider commonly used security zones as follows:

- Trusted Zone – a zone where the organisation has full control; this is typically where business applications and data are hosted.

- Semi-Trusted (DMZ) – a typical demilitarised zone between the Untrusted and Trusted zones. External connections are terminated here on a gateway or proxy and validated before a new connection is created into the Trusted zone. Semi-trusted zones may also be used for networks controlled by another organisation on behalf of the organisation, e.g., a cloud SaaS application.

- Untrusted Zone – a zone over which the organisation has little or no control, such as the internet.
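The zone model can be expressed as a simple path check. The sketch assumes one rule that is not stated explicitly above: every connection, inbound or outbound, may cross at most one zone boundary. Under that rule, traffic between the Untrusted and Trusted zones must terminate in the Semi-Trusted zone. `Zone`, `hop_allowed` and `path_allowed` are hypothetical names.

```python
from enum import Enum
from typing import List


class Zone(Enum):
    UNTRUSTED = 0
    SEMI_TRUSTED = 1  # DMZ: connections terminate on a gateway or proxy here
    TRUSTED = 2


def hop_allowed(src: Zone, dst: Zone) -> bool:
    """Assumed rule: a single connection may move at most one trust level, in either direction."""
    return abs(dst.value - src.value) <= 1


def path_allowed(path: List[Zone]) -> bool:
    """Check every consecutive hop of a connection path."""
    return all(hop_allowed(a, b) for a, b in zip(path, path[1:]))


print(path_allowed([Zone.UNTRUSTED, Zone.TRUSTED]))                     # False
print(path_allowed([Zone.UNTRUSTED, Zone.SEMI_TRUSTED, Zone.TRUSTED]))  # True
```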

Solution Pattern Name: Manual-Internal

| Solution Pattern Name: | Manual-Internal |

| Problem: | Data needs to be shared between internal applications on an infrequent basis and the cost of an automated integration cannot be justified. |

| Solution: | Document and execute a manual process where a user manually extracts data from one application and uploads it into another |

| Solution Guidelines: | The frequency of the integration must be very low, e.g., a weekly, monthly or yearly task; anything more frequent may be missed. |

| Security Guidelines: | The users executing the task must have access to both applications; this may require opening firewalls. For more sensitive data, consider running the task from a virtual desktop that only specific users can access and that can only reach the applications involved. |

| Context Diagram: | See diagram below |

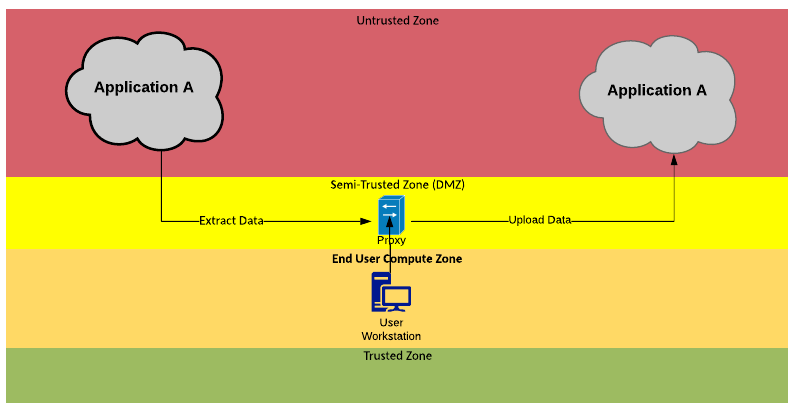

Solution Pattern Name: Manual-External

| Solution Pattern Name: | Manual-External |

| Problem: | Data needs to be shared between an internal and external application on an infrequent basis and the cost of an automated integration cannot be justified. |

| Solution: | Document and execute a manual process where a user manually extracts data from one application and uploads it into another. |

| Solution Guidelines: | The frequency of the integration must be very low, e.g., a weekly, monthly or yearly task; anything more frequent may be missed. |

| Security Guidelines: | The users executing the task must have access to both applications; this may require opening firewalls and configuring a proxy server for access to the external application. For more sensitive data, consider running the task from a virtual desktop that only specific users can access and that can only reach the applications involved. |

| Context Diagram: | See diagram below |

Solution Pattern Name: Manual-Cloud

| Solution Pattern Name: | Manual-Cloud |

| Problem: | Data needs to be shared between two external applications on an infrequent basis and the cost of an automated integration cannot be justified. |

| Solution: | Document and execute a manual process where a user manually extracts data from one application and uploads it into another. |

| Solution Guidelines: | The frequency of the integration must be very low, e.g., a weekly, monthly or yearly task; anything more frequent may be missed. |

| Security Guidelines: | The users executing the task must have access to both applications; this may require opening firewalls and configuring a proxy server for access to the external applications. For more sensitive data, consider running the task from a virtual desktop that only specific users can access and that can only reach the applications involved. Ideally, the user desktop connects to the cloud provider over a dedicated connection rather than the internet, e.g., AWS Direct Connect, Azure ExpressRoute, Google Cloud Dedicated Interconnect. |

| Context Diagram: | See diagram below |

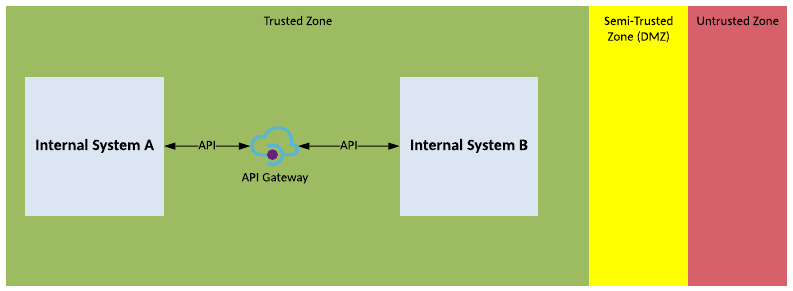

Solution Pattern Name: API-Internal

| Solution Pattern Name: | API-Internal |

| Problem: | An internal application needs to share data or a service with an internal user or system in real-time. |

| Solution: | Deploy a configuration on the API Gateway to proxy the connection from the internal system or user to the internal system that provides the data or service. |

| Solution Guidelines: | Implement configuration on the API Gateway that authenticates, authorises, and throttles connections to the internal system. The internal system providing the API endpoint will: use REST or SOAP over HTTPS; describe the API using standard description specifications such as the OpenAPI Specification (OAS) or Web Services Description Language (WSDL); and use standard data formats such as JSON and XML. Information objects exchanged should be based on a common vocabulary or industry-standard formats. The interface must be documented. The organisation should define API standards to ensure APIs are presented consistently to consumers. If the APIs exposed by the provider system require data transformations or the orchestration of several API calls into a single service, consider using the Middleware-Internal pattern. |

| Security Guidelines: | Enable transport layer security on the API endpoint exposed to the consumer. All connections must be made via the API Gateway to enforce authentication, authorisation and throttling policies on inbound requests. Use OAuth, API keys, or mutual TLS certificates to authenticate consumers of the service. Use API Management to enforce authentication, authorisation and throttling policies on API requests. |

| Context Diagram: | See diagram below |

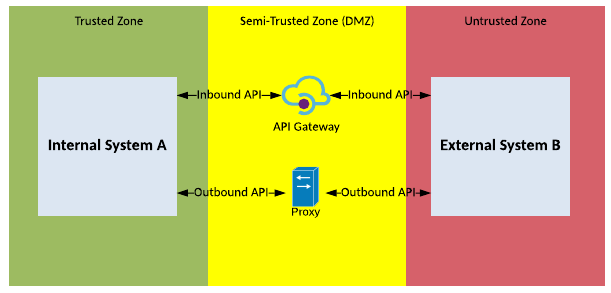
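API Gateway products express throttling as policy configuration rather than code. A common algorithm behind such policies is the token bucket, sketched minimally below. The class name and parameters are illustrative assumptions, not any gateway’s API. A gateway would typically keep one bucket per consumer or API key.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock  # injectable so the behaviour can be tested deterministically
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill tokens for the elapsed time, never exceeding the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests here
```

The capacity controls how large a burst is tolerated, while the rate sets the sustained request limit per consumer.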

Solution Pattern Name: API-External

| Solution Pattern Name: | API-External |

| Problem: | An internal application needs to share data or a service with an external user or system in real-time. |

| Solution: | For inbound APIs, deploy a configuration on the API Gateway to proxy the connection from the external system or user to the internal system that provides the data or service. For outbound APIs, deploy a configuration on a forward proxy to enable connectivity to the external provider. |

| Solution Guidelines: | Implement configuration on the API Gateway that authenticates, authorises, and throttles connections to the internal system. The internal system providing the API endpoint will: use REST or SOAP over HTTPS; describe the API using standard description specifications such as the OpenAPI Specification (OAS) or Web Services Description Language (WSDL); and use standard data formats such as JSON and XML. Information objects exchanged should be based on a common vocabulary or industry-standard formats. The interface must be documented. The organisation should define API standards to ensure APIs are presented consistently to consumers. If the APIs exposed by the provider system require data transformations or the orchestration of several API calls into a single service, consider using the Middleware-External pattern. If the internal application initiates the connection, the outbound connection must go via a forward proxy. |

| Security Guidelines: | Inbound connections must be made via the API Gateway in the DMZ. Enable transport layer security on the API endpoint. Use API keys or digital certificates to authenticate consumers of the service. Use API Management to enforce authentication, authorisation and throttling policies on inbound requests. For outbound connections, configure the forward proxy and firewall to allow connections from the internal application to the external application. |

| Context Diagram: | See diagram below |

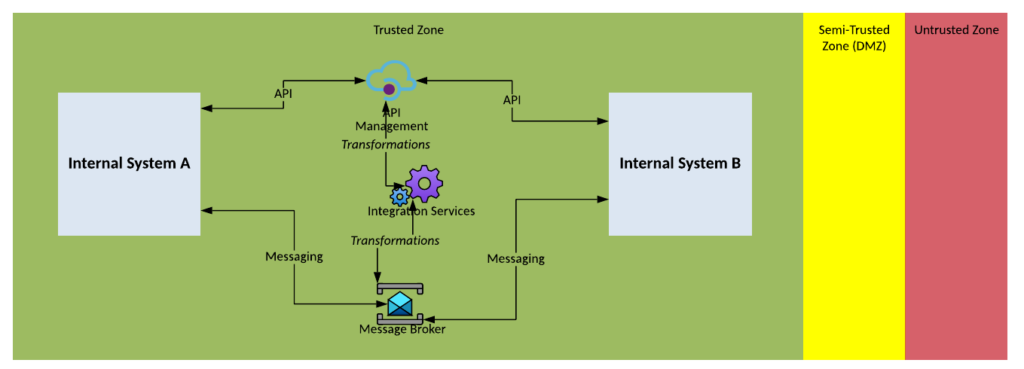
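On the outbound side, the internal application has to route its calls through the forward proxy rather than connecting directly. The following is a minimal sketch using Python’s standard `urllib.request`. The proxy address and the partner URL are hypothetical placeholders.

```python
import urllib.request

def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Route outbound HTTP(S) calls from the internal application via the forward proxy."""
    # HTTPS requests are tunnelled through the proxy with CONNECT, so TLS stays end to end.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

# Hypothetical proxy address; substitute the organisation's forward proxy.
opener = build_proxied_opener("http://forward-proxy.internal.example:3128")
# opener.open("https://api.partner.example/v1/orders", timeout=10)  # hypothetical endpoint
```

Keeping the proxy address in configuration lets the firewall allow only the proxy, not every internal application, to reach the internet.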

Solution Pattern Name: Middleware-Internal

| Solution Pattern Name: | Middleware-Internal |

| Problem: | An internal system is interested in consuming data from another internal system in real-time |

| Solution: | Deploy a real-time service on the Middleware Services component which can connect interested consumers with a data provider over a variety of protocols and data formats |

| Solution Guidelines: | Implement an Integration Service using Middleware Services such as a message broker or an Integration Service. Use standard protocols such as HTTPS, AMQP or MQTT. Use common data formats such as JSON or XML. Information objects exchanged should be based on a common vocabulary. The interface must be documented, ideally using common specification languages such as the OpenAPI Specification (OAS) and Web Services Description Language (WSDL). |

| Security Guidelines: | Enable transport layer security on all interfaces. Use OAuth, API keys, mutual TLS certificates, or username/password to authenticate consumers of the service. If the Integration Service exposes an API, use API Management to enforce security and performance policies on API requests. |

| Context Diagram: | See diagram below |
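Basing information objects on a common vocabulary usually means the Integration Service translates each provider’s field names into a shared canonical model. The following is a minimal sketch of such a message translator. The provider field names and the `Customer` vocabulary are hypothetical examples.

```python
import json

# Hypothetical mapping from one provider's field names to a shared "Customer" vocabulary.
FIELD_MAP = {"cust_no": "customerId", "fname": "givenName", "lname": "familyName"}

def to_canonical(provider_record: dict) -> str:
    """Translate a provider-specific record into the common vocabulary as JSON.

    Fields with no agreed canonical name are dropped rather than leaked downstream.
    """
    canonical = {FIELD_MAP[k]: v for k, v in provider_record.items() if k in FIELD_MAP}
    return json.dumps(canonical, sort_keys=True)
```

Consumers then depend only on the canonical names. A change in the provider’s schema is absorbed by updating the mapping in one place.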

Solution Pattern Name: Middleware-External

| Solution Pattern Name: | Middleware-External |

| Problem: | An internal system needs to exchange data in real-time with an external system. The exchange may be inbound or outbound. |

| Solution: | Deploy a real-time service on the Middleware Services component which can connect interested consumers with a data provider over a variety of protocols and data formats. For inbound APIs, deploy a configuration on the API Gateway to make the connection between the Middleware Services and the external system. For outbound APIs, deploy a configuration on a forward proxy to enable connectivity to the external provider. For interfaces that use messaging, deploy a message broker in the DMZ to connect with the external system. |

| Solution Guidelines: | Implement an Integration Service using Middleware Services such as a message broker or an Integration Service. Use standard protocols such as HTTPS, AMQP or MQTT. Use common data formats such as JSON or XML. Information objects exchanged should be based on a common vocabulary. Interfaces should be documented using common specification languages such as the OpenAPI Specification (OAS) and Web Services Description Language (WSDL). |

| Security Guidelines: | Inbound connections must be made via the API Gateway in the DMZ. Enable transport layer security on the API endpoint. Use API keys or digital certificates to authenticate consumers of the service. Use API Management to enforce authentication, authorisation and throttling policies on inbound requests. For outbound connections, configure the forward proxy and firewall to allow connections from the internal application to the external application. For message broker connections, the firewall must whitelist the client’s IP address and client certificate. The message broker in the DMZ will exchange messages with the message broker in the trusted network; external clients must not connect directly to the internal message broker. |

| Context Diagram: | See diagram below |
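For the DMZ message broker, the two checks named above can be illustrated together: an IP allowlist and a mandatory client certificate. Real brokers configure this in their own settings. The following is only a minimal sketch with Python’s standard `ipaddress` and `ssl` modules. The partner network (a documentation range) and the function names are assumptions. A real server context would also need its own certificate loaded with `load_cert_chain`.

```python
import ipaddress
import ssl
from typing import Optional

# Hypothetical partner network agreed with the external party.
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]

def client_ip_allowed(ip: str) -> bool:
    """Reject connections from addresses outside the agreed partner ranges."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in ALLOWED_NETWORKS)

def mutual_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that refuses clients without a trusted certificate."""
    # ca_file would hold the CA that issued the partners' client certificates.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca_file)
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The IP allowlist check is cheap and rejects unknown sources early. The certificate check then proves which partner is connecting.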

Solution Pattern Name: Middleware-Cloud

| Solution Pattern Name: | Middleware-Cloud |

| Problem: | Two external systems need to exchange data in real-time and a native solution is not available |

| Solution: | Deploy a real-time service on the Middleware Services component which can connect the two systems |

| Solution Guidelines: | Implement an Integration Service using Middleware Services such as a message broker or an Integration Service. Use standard protocols such as HTTPS, AMQP or MQTT. Use common data formats such as JSON or XML. Information objects exchanged should be based on a common vocabulary. Interfaces should be documented using common specification languages such as the OpenAPI Specification (OAS) and Web Services Description Language (WSDL). Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Inbound connections must be made via the API Gateway in the DMZ. Enable transport layer security on the API endpoint. Use API keys or digital certificates to authenticate consumers of the service. Use API Management to enforce authentication, authorisation and throttling policies on inbound requests. For outbound connections, configure the forward proxy and firewall to allow connections to the external application. For message broker connections, the firewall must whitelist the client’s IP address and client certificate. The message broker in the DMZ will exchange messages with the message broker in the trusted network; external clients must not connect directly to the internal message broker. Consider a dedicated connection to the cloud provider rather than the internet, e.g., AWS Direct Connect, Azure ExpressRoute, Google Cloud Dedicated Interconnect. |

| Context Diagram: | See diagram below |

Solution Pattern Name: FileTransfer-Internal

| Solution Pattern Name: | FileTransfer-Internal |

| Problem: | An internal system needs to exchange files with another internal system |

| Solution: | Configure a file transfer on the Secure File Transfer component that will automatically transfer the file from the data provider to the interested consumers or allow them to pick up the file when required |

| Solution Guidelines: | Configure the internal systems’ credentials on the Secure File Transfer component. Implement a file transfer configuration on the Secure File Transfer component. Use standard protocols such as SFTP. File formats should be agreed between providers and consumers, with a preference for industry-standard formats. The interface must be documented, including account names, directory structure, file naming, file formats and schedule. Middleware Services or ETL components should be used to perform transformations if required. |

| Security Guidelines: | Enable transport layer security on consumer and provider connections. Use credentials or SSH keys to authenticate consumers and providers. Files should be scanned for malware during transfer. The FTP protocol must not be used, as it sends credentials in clear text. |

| Context Diagram: | See diagram below |
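Documenting file naming is most useful when the convention is also enforced before a transfer is accepted. The following is a minimal sketch of such a check. The `<SYSTEM>_<dataset>_<YYYYMMDD>.<ext>` convention and the allowed extensions are hypothetical examples of what an interface document might specify.

```python
import re

# Hypothetical naming convention: <SYSTEM>_<dataset>_<YYYYMMDD>.<ext>
NAME_PATTERN = re.compile(
    r"^(?P<system>[A-Z]{3,10})_(?P<dataset>[a-z]+)_(?P<date>\d{8})\.(csv|xml|json)$"
)

def validate_name(filename: str) -> dict:
    """Return the named parts of a compliant file name, or raise ValueError."""
    match = NAME_PATTERN.match(filename)
    if not match:
        raise ValueError(f"file name does not follow the interface convention: {filename}")
    return match.groupdict()
```

Rejecting a badly named file at the Secure File Transfer component is cheaper than discovering it later in a downstream load.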

Solution Pattern Name: FileTransfer-External

| Solution Pattern Name: | FileTransfer-External |

|---|---|

| Problem: | An internal system needs to exchange files with an external party. |

| Solution: | Configure a file transfer on the Secure File Transfer server component that will transfer the file between the internal system and the external party. |

| Solution Guidelines: | Configure the external party’s credentials on the Secure File Transfer server component. Implement a file transfer configuration on the Secure File Transfer server component. Use standard protocols such as SFTP. File formats should be agreed between providers and consumers, with a preference for industry-standard formats. The interface must be documented, including account names, directory structure, file naming, file formats and schedule. Middleware Services or ETL components should be used to perform transformations if required. |

| Security Guidelines: | Enable transport layer security on consumer and provider connections. Use credentials or SSH keys to authenticate consumers and providers. Files should be scanned for malware during transfer, especially files coming from an external system. The FTP protocol must not be used, as it sends credentials in clear text. A Secure File Transfer Gateway must be used in the DMZ to authenticate users before files can be transferred to the internal server component. Files should not be stored in the DMZ. |

| Context Diagram: | See diagram below |
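The rule that files should not be stored in the DMZ implies the gateway streams data straight through to the internal server. The following is a minimal sketch of that principle using file-like objects. The SFTP sessions themselves are not shown, and `relay` is a hypothetical name.

```python
import hashlib
import io
from typing import BinaryIO

def relay(source: BinaryIO, destination: BinaryIO, chunk_size: int = 64 * 1024) -> str:
    """Stream bytes from the external session to the internal server in chunks.

    Nothing is written to local disk in the DMZ; the SHA-256 of the relayed bytes
    is returned so both ends can confirm the file arrived intact.
    """
    digest = hashlib.sha256()
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        digest.update(chunk)
        destination.write(chunk)
    return digest.hexdigest()

# In-memory streams stand in for the external and internal connections.
inbound = io.BytesIO(b"2024-01-31,INV-1001,250.00\n")
outbound = io.BytesIO()
checksum = relay(inbound, outbound)
```

Memory use is bounded by `chunk_size` rather than the file size, so the gateway can relay large files without staging them.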

Solution Pattern Name: FileTransfer-Cloud

| Solution Pattern Name: | FileTransfer-Cloud |

|---|---|

| Problem: | Two external systems need to exchange files and there is no native solution available. |

| Solution: | Configure a file transfer on the Secure File Transfer server component that will transfer the file between the external systems. |

| Solution Guidelines: | Configure the external parties’ credentials on the Secure File Transfer component. Implement a file transfer configuration on the Secure File Transfer component. Use standard protocols such as SFTP. File formats should be agreed between providers and consumers, with a preference for industry-standard formats. The interface must be documented, including account names, directory structure, file naming, file formats and schedule. Middleware Services or ETL components should be used to perform transformations if required. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on consumer and provider connections. Use credentials or SSH keys to authenticate consumers and providers. Files should be scanned for malware during transfer, especially files coming from an external system. The FTP protocol must not be used, as it sends credentials in clear text. A Secure File Transfer Gateway must be used in the DMZ to authenticate users before files can be transferred to the internal server component. Files should not be stored in the DMZ. Consider a dedicated connection to the cloud provider rather than the internet, e.g., AWS Direct Connect, Azure ExpressRoute, Google Cloud Dedicated Interconnect. |

| Context Diagram: | See diagram below |

Solution Pattern Name: ESP-Internal

| Solution Pattern Name: | ESP-Internal |

| Problem: | An internal system needs to stream data to another interested internal system |

| Solution: | Deploy a topic and partitions from which consumers can consume the messages that have been streamed. |

| Solution Guidelines: | Configure credentials for the internal applications on the ESP component. Configure a topic for the producer to publish messages to. Create queues or partitions for consumers to consume messages from. Optionally, create streaming APIs that process the topic data in real-time, e.g., aggregating messages over a time window or joining data, and publish the results to another topic. Use a common streaming protocol such as Kafka. Information objects exchanged should be based on a common vocabulary. The interface must be documented using standards such as CloudEvents, OpenTelemetry and AsyncAPI. |

| Security Guidelines: | Enable transport layer security on all connections. Configure credentials or mutual certificates to authenticate producers and consumers. |

| Context Diagram: | See diagram below |
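The optional streaming APIs that aggregate messages over a time window are normally built with the streaming platform’s own tooling. The sketch below shows the idea of a tumbling (fixed, non-overlapping) window count in plain Python. The `(epoch_seconds, key)` event shape is an assumption for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: int
) -> Dict[Tuple[int, str], int]:
    """Count events per key in fixed, non-overlapping time windows.

    Each event is (epoch_seconds, key). The result maps (window_start, key) to a
    count, which a stream processor would publish to another topic as windows close.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts, key in events:
        window_start = int(ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)
```

A production stream processor additionally has to decide how long to wait for late events before closing a window. This sketch ignores that concern.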

Solution Pattern Name: ESP-External

| Solution Pattern Name: | ESP-External |

| Problem: | An internal system needs to stream data to an external system and vice versa |

| Solution: | Deploy an ESP topic for the producer system to publish messages to, and a queue/partition from which the consumer system consumes messages via a proxy in the DMZ. |

| Solution Guidelines: | Configure credentials for the systems on the ESP component. Configure a topic for the producer to publish messages to. Create queues/partitions for the consumer system to consume messages from. Optionally, create streaming APIs that process the topic data in real-time, e.g., aggregating messages over a time window or joining data, and publish the results to another topic. Use a common streaming protocol such as Kafka. Information objects exchanged should be based on a common vocabulary. The interface must be documented; consider the following specifications: CloudEvents, OpenTelemetry, AsyncAPI. |

| Security Guidelines: | Enable transport layer security on all connections. Configure credentials to authenticate producers and consumers. Consider using client certificates for external parties. Whitelist the client IPs on the firewall. A proxy in the DMZ will proxy connections through to the server in the trusted zone; external clients must not connect directly to the internal ESP server. |

| Context Diagram: | See diagram below |
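When events cross an organisational boundary, a shared envelope such as CloudEvents lets both parties route and trace messages without inspecting the payload. The following is a minimal sketch of a CloudEvents 1.0 event in structured JSON mode. The event type and source values are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone

def to_cloudevent(event_type: str, source: str, data: dict) -> str:
    """Wrap a payload in a CloudEvents 1.0 envelope (structured JSON mode)."""
    envelope = {
        "specversion": "1.0",                            # required
        "id": str(uuid.uuid4()),                         # required; unique per source
        "source": source,                                # required; identifies the producer
        "type": event_type,                              # required; reverse-DNS naming is conventional
        "time": datetime.now(timezone.utc).isoformat(),  # optional; RFC 3339 timestamp
        "datacontenttype": "application/json",           # optional
        "data": data,
    }
    return json.dumps(envelope)
```

The external party can validate the required attributes before processing the `data` payload, which keeps the contract explicit at the DMZ boundary.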

Solution Pattern Name: ESP-Cloud

| Solution Pattern Name: | ESP-Cloud |

| Problem: | Two external systems need to stream data to each other and no native solution exists. |

| Solution: | Deploy an ESP topic for the producer system to publish messages to, and a queue/partition from which the consumer system consumes messages via a proxy in the DMZ. |

| Solution Guidelines: | Configure credentials for the systems on the ESP component. Configure a topic for the producer to publish messages to. Create queues/partitions for the consumer system to consume messages from. Optionally, create streaming APIs that process the topic data in real-time, e.g., aggregating messages over a time window or joining data, and publish the results to another topic. Use a common streaming protocol such as Kafka. Information objects exchanged should be based on a common vocabulary. The interface must be documented; consider the following specifications: CloudEvents, OpenTelemetry, AsyncAPI. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on all connections. Configure credentials to authenticate producers and consumers. Consider using client certificates for external parties. Whitelist the client IPs on the firewall. A proxy in the DMZ will proxy connections through to the server in the trusted zone; external clients must not connect directly to the internal ESP server. Consider a dedicated connection to the cloud provider rather than the internet, e.g., AWS Direct Connect, Azure ExpressRoute, Google Cloud Dedicated Interconnect. |

| Context Diagram: | See diagram below |

Solution Pattern Name: ETL-Internal

| Solution Pattern Name: | ETL-Internal |

| Problem: | An internal system is interested in consuming a bulk data set from another internal system that has a low frequency of change but a high volume of data. |

| Solution: | Configure a job on the Extract Transform Load component that will extract the data from the source system, transform the data into the required format and load it into the target system. The load step can occur before the transform step for performance reasons. |

| Solution Guidelines: | Implement an ETL job on the Extract Transform Load component. The job should be triggered at a scheduled time, by an event or manually. Error handling must be implemented to meet requirements, e.g., restart from regular checkpoints, roll back on failure, or save error records to a file. The interface must be documented. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. |

| Context Diagram: | See diagram below |
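Two of the error-handling options above are restarting from regular checkpoints and saving error records. The following is a minimal sketch of both. The `checkpoint` dict and `errors` list are hypothetical stand-ins for a durable control table and an error file. Rows processed after the last checkpoint are reprocessed on restart, so the sketch assumes the load step is idempotent (for example, an upsert).

```python
from typing import Callable, List

def run_etl(rows: List[dict], transform: Callable[[dict], dict], load: Callable[[dict], None],
            checkpoint: dict, errors: list, commit_every: int = 100) -> None:
    """Transform and load rows, recording progress so a failed run can restart.

    `checkpoint` stands in for durable storage such as a control table, and
    `errors` collects rejected records that would normally go to an error file.
    """
    start = checkpoint.get("next_row", 0)  # resume after the last checkpoint
    for i in range(start, len(rows)):
        try:
            load(transform(rows[i]))
        except ValueError as exc:
            # Data-quality failure: park the record and continue with the rest.
            errors.append({"row": i, "record": rows[i], "error": str(exc)})
        if (i + 1) % commit_every == 0:
            checkpoint["next_row"] = i + 1  # all rows before this index are done
    checkpoint["next_row"] = len(rows)
```

The checkpoint interval trades restart cost against the overhead of writing progress. Rollback on failure, the other listed option, would instead wrap the whole load in a single transaction.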

Solution Pattern Name: ETL-External

| Solution Pattern Name: | ETL-External |

| Problem: | An internal system is interested in exchanging a bulk data set with an external system that has a low frequency of change but a high volume of data. |

| Solution: | Configure a job on the Extract Transform Load component that will extract the data from the source system, transform the data into the required format and load it into the target system. The load step can occur before the transform step for performance reasons. Data exchange with an external system should be either via API calls or file transfers. |

| Solution Guidelines: | Implement an ETL job on the Extract Transform Load component. The job should be triggered at a scheduled time, by an event or manually. Error handling must be implemented to meet requirements, e.g., restart from regular checkpoints, roll back on failure, or save error records to a file. The interface must be documented. Direct database connections with external parties must be avoided; use API calls instead. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. The connection to the external system must be via an HTTP proxy or Secure File Transfer Gateway in the DMZ. If using files, it is highly recommended that the FileTransfer-External pattern be followed. |

| Context Diagram: | See diagram below |

Solution Pattern Name: ETL-Cloud

| Solution Pattern Name: | ETL-Cloud |

| Problem: | Two external systems are interested in exchanging a bulk data set with each other; the data set has a low frequency of change but a high volume of data, and there is no native solution available. |

| Solution: | Configure a job on the Extract Transform Load component that will extract the data from the source system, transform the data into the required format and load it into the target system. The load step can occur before the transform step for performance reasons. Data exchange with an external system should be either via API calls or file transfers. |

| Solution Guidelines: | Implement an ETL job on the Extract Transform Load component. The job should be triggered at a scheduled time, by an event or manually. Error handling must be implemented to meet requirements, e.g., restart from regular checkpoints, roll back on failure, or save error records to a file. The interface must be documented. Direct database connections with external parties must be avoided; use API calls instead. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. The connection to the external system must be via an HTTP proxy or Secure File Transfer Gateway in the DMZ. If using files, it is highly recommended that the FileTransfer-Cloud pattern be followed. |

| Context Diagram: | See diagram below |

Solution Pattern Name: CDC-Internal

| Solution Pattern Name: | CDC-Internal |

| Problem: | An internal system is interested in consuming recently changed data from another internal system’s datastore without making changes to the system. |

| Solution: | Configure a job on the Change Data Capture component to monitor the source system’s datastore for changes and, at regular intervals, publish the changes as either a message or a file for other systems to consume. |

| Solution Guidelines: | Implement a job on the Change Data Capture component to capture the changes that have occurred since the last trigger. The job should be triggered at regular intervals, e.g., every 15 minutes. The changes should be published in an agreed format and protocol, typically as either a message or a file. Error handling must be implemented to meet requirements, e.g., keep track of the last successful publish time and, after an outage, limit the amount of data published to avoid overloading subscribers. The interface must be documented. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. |

| Context Diagram: | See diagram below |
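The error-handling guidance (track the last successful publish and cap each batch after an outage) can be illustrated with a watermark. Log-based CDC products read the database transaction log. The sketch below instead uses a simpler query-based approach with an `updated_at` watermark, which is a swapped-in technique. The `customer` table and the in-memory `state` dict are hypothetical. Ordering by `(updated_at, id)` avoids skipping rows that share a timestamp across a batch boundary.

```python
import sqlite3

def poll_changes(conn: sqlite3.Connection, state: dict, max_rows: int = 500) -> list:
    """Return rows changed since the last successful publish, at most `max_rows` per call."""
    ts, last_id = state.get("last_published", ("", 0))
    return conn.execute(
        "SELECT id, name, updated_at FROM customer "
        "WHERE updated_at > ? OR (updated_at = ? AND id > ?) "
        "ORDER BY updated_at, id LIMIT ?",
        (ts, ts, last_id, max_rows),
    ).fetchall()

def mark_published(state: dict, rows: list) -> None:
    """Advance the watermark only after the batch has been published successfully."""
    if rows:
        last = rows[-1]
        state["last_published"] = (last[2], last[0])
```

If publishing fails, the watermark is not advanced, so the same batch is retried on the next trigger. The `max_rows` cap spreads a post-outage backlog over several intervals.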

Solution Pattern Name: CDC-External

| Solution Pattern Name: | CDC-External |

| Problem: | An internal system is interested in exchanging recently changed data with an external system without making changes to the system. |

| Solution: | Configure a job on the Change Data Capture component to monitor the source system’s datastore for changes and, at regular intervals, publish the changes as either a message or a file for other systems to consume. Data exchange with an external system should be either via API calls or file transfers. |

| Solution Guidelines: | Implement a job on the Change Data Capture component to capture the changes that have occurred since the last trigger. The job should be triggered at regular intervals, e.g., every 15 minutes. The changes should be published in an agreed format and protocol, typically as either a message or a file. Error handling must be implemented to meet requirements, e.g., keep track of the last successful publish time and, after an outage, limit the amount of data published to avoid overloading subscribers. Direct database connections with external parties must be avoided; use API calls instead. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. The connection to the external system must be via an HTTP proxy, Secure File Transfer Gateway or Message Broker in the DMZ. If using files, it is highly recommended that the FileTransfer-External pattern be followed. |

| Context Diagram: | See diagram below |

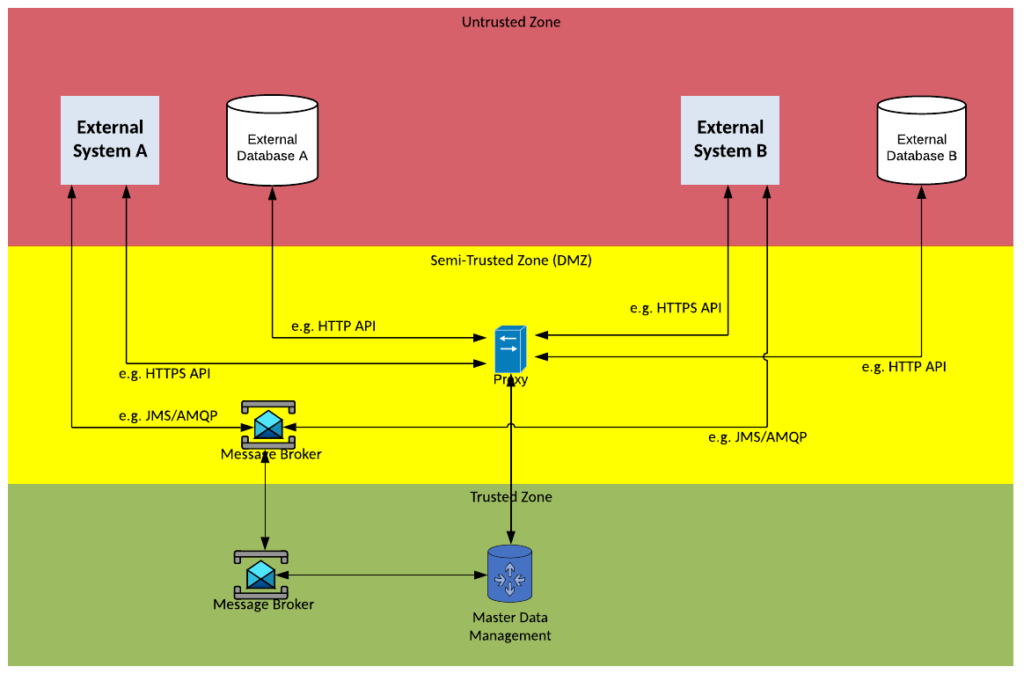

Solution Pattern Name: CDC-Cloud

| Solution Pattern Name: | CDC-Cloud |

| Problem: | Two external systems are interested in exchanging recently changed data with each other, there is no native solution available, and the organisation wants to avoid making changes to the systems. |

| Solution: | Configure a job on the Change Data Capture component that will monitor the source system’s datastore for changes and, at regular intervals, publish the changes as either a message or a file for other systems to consume. Data exchange with an external system should be either via API calls or file transfers. |

| Solution Guidelines: | Implement a job on the Change Data Capture component to capture the changes that have occurred since the last trigger. The job should be triggered at regular intervals, e.g., every 15 minutes. The changes should be published in an agreed format and protocol, typically as either a message or a file. Error handling must be implemented to meet requirements, e.g., keep track of the last successful publish time and, after an outage, limit the amount of data published to avoid overloading subscribers. Direct database connections with external parties must be avoided; use API calls instead. This may limit the CDC component functionality that can be used between two external parties. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. The connection to the external system must be via an HTTP proxy, Secure File Transfer Gateway or Message Broker in the DMZ. If using files, it is highly recommended that the FileTransfer-Cloud pattern be followed. |

| Context Diagram: | See diagram below |

Solution Pattern Name: MDM-Internal

| Solution Pattern Name: | MDM-Internal |

| Problem: | Two internal systems need to keep their reference data synchronised with the organisation’s single source of truth for reference data. |

| Solution: | Configure the reference data set in the Master Data Management system. Depending on the style of MDM several interfaces will need to be created that may query, push, or synchronise the reference data in the MDM and downstream systems. The interfaces typically use direct database access, API calls or messaging. |

| Solution Guidelines: | Analyse how the master data entity is used in the organisation and determine each system that creates and updates the entity. Configure the master data entity in the MDM solution. Depending on the style of MDM being adopted (Registry, Consolidation, Coexistence, Centralized), several interfaces will need to be created with downstream systems. Each interface may query, push, or synchronise the reference data. Error handling must be implemented to meet requirements, e.g., retry changes several times when a system is not available, and configure survivorship rules to resolve conflicting changes when some systems have better quality data than others. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. |

| Context Diagram: | See diagram below |

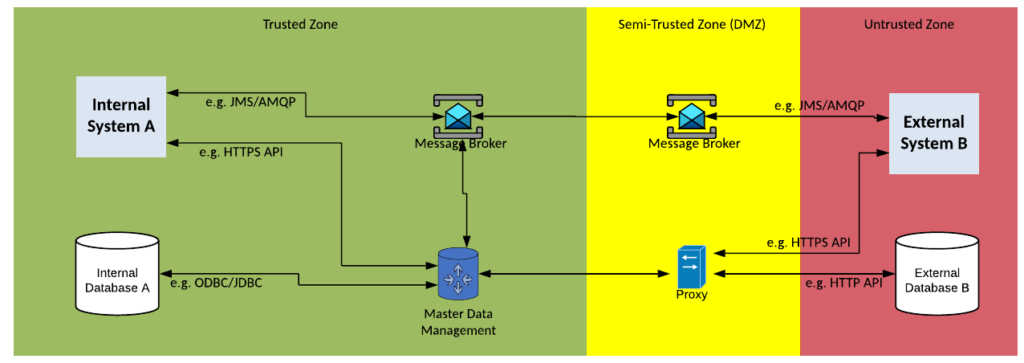
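Survivorship rules decide which source wins when systems make conflicting changes to the same entity. MDM products configure these rules declaratively. The following is a minimal sketch of one common rule: most-trusted source first, then most recent update. The source names, the priority ranking and the record shape are hypothetical.

```python
from typing import Dict, List

# Hypothetical trust ranking: a lower number wins when sources disagree.
SOURCE_PRIORITY = {"HR": 1, "CRM": 2, "Billing": 3}

def golden_record(candidates: List[dict]) -> Dict[str, object]:
    """Build a golden record attribute by attribute.

    For each attribute, take the non-empty value from the most trusted source;
    if two sources share a priority, the most recently updated value wins.
    """
    ranked = sorted(
        candidates,
        key=lambda c: (SOURCE_PRIORITY.get(c["source"], 99), -c["updated"]),
    )
    result: Dict[str, object] = {}
    for record in ranked:
        for attr, value in record["attributes"].items():
            if value not in (None, "") and attr not in result:
                result[attr] = value
    return result
```

Applying the rule per attribute lets a less trusted system fill gaps, such as a missing phone number, without overriding better data elsewhere.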

Solution Pattern Name: MDM-External

| Solution Pattern Name: | MDM-External |

| Problem: | An internal and an external system need to keep their reference data synchronised with the organisation’s single source of truth for reference data. |

| Solution: | Configure the reference data set in the Master Data Management system. Depending on the style of MDM several interfaces will need to be created that may query, push, or synchronise the reference data in the MDM and downstream systems. External systems should be limited to API calls or messaging. |

| Solution Guidelines: | Analyse how the master data entity is used in the organisation and determine each system that creates and updates the entity. Configure the master data entity in the MDM solution. Depending on the style of MDM being adopted (Registry, Consolidation, Coexistence, Centralized), several interfaces will need to be created with downstream systems. Each interface may query, push, or synchronise the reference data. Error handling must be implemented to meet requirements, e.g., retry changes several times when a system is not available, and configure survivorship rules to resolve conflicting changes when some systems have better quality data than others. Direct database connections with external parties must be avoided; use API calls instead. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. The connection to the external system must be via an HTTP proxy or a Message Broker in the DMZ. |

| Context Diagram: | See diagram below |
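When an external system is unavailable, the guidance is to retry a change several times. The following is a minimal sketch of retries with exponential backoff and jitter. The function name, the default attempt count and the delays are assumptions. The exceptions treated as transient would depend on the client library in use.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def with_retries(call: Callable[[], T], attempts: int = 5, base_delay: float = 0.5,
                 retry_on: tuple = (ConnectionError, TimeoutError), sleep=time.sleep) -> T:
    """Retry a call to an unavailable system with exponential backoff and jitter."""
    attempt = 1
    while True:
        try:
            return call()
        except retry_on:
            if attempt >= attempts:
                raise  # give up; the change should be parked for manual follow-up
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronised retries
            attempt += 1
```

Backoff gives the external system time to recover. Re-raising after the last attempt ensures the failure is surfaced rather than silently dropped.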

Solution Pattern Name: MDM-Cloud

| Solution Pattern Name: | MDM-Cloud |

| Problem: | Two external systems need to keep their reference data synchronised with the organisation’s single source of truth for reference data. |

| Solution: | Configure the reference data set in the Master Data Management system. Depending on the style of MDM several interfaces will need to be created that may query, push, or synchronise the reference data in the MDM and downstream systems. External systems should be limited to API calls or messaging. |

| Solution Guidelines: | Analyse how the master data entity is used in the organisation and determine each system that creates and updates the entity. Configure the master data entity in the MDM solution. Depending on the style of MDM being adopted (Registry, Consolidation, Coexistence, Centralized), several interfaces will need to be created with downstream systems. Each interface may query, push, or synchronise the reference data. Error handling must be implemented to meet requirements, e.g., retry changes several times when a system is not available, and configure survivorship rules to resolve conflicting changes when some systems have better quality data than others. Direct database connections with external parties must be avoided; use API calls instead. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on source and target connections. Do not store credentials to datastores in cleartext. Connections to the external system must go through an HTTP proxy or a Message Broker in the DMZ. |

| Context Diagram: | See diagram below |

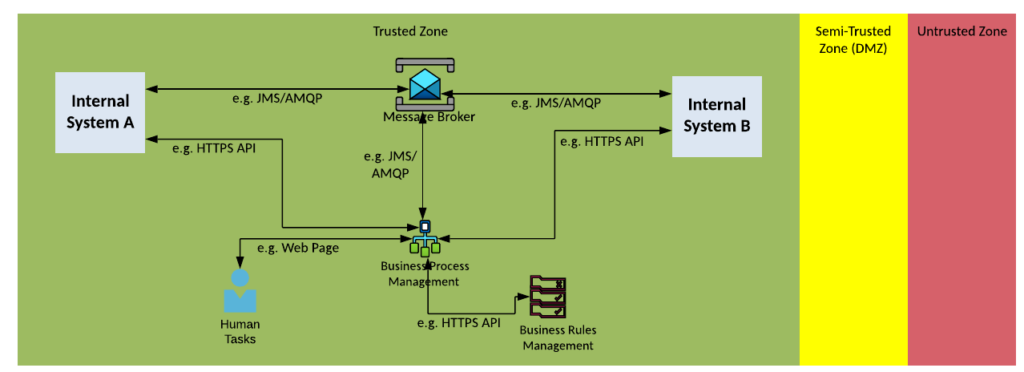
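Survivorship rules decide which value wins when systems make conflicting changes to the same master data entity. The Python sketch below shows one common rule: the most trusted system wins, and recency breaks ties. The `TRUST` ranking, the system names and the attribute names are all hypothetical. An MDM product would normally configure these rules rather than code them.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SourceRecord:
    system: str            # hypothetical system names, e.g. "ERP", "CRM"
    updated_at: datetime
    attributes: dict


# Hypothetical trust ranking: a lower number means a more trusted source.
TRUST = {"ERP": 1, "CRM": 2, "WebPortal": 3}


def golden_record(records: list) -> dict:
    """Resolve conflicts attribute by attribute.

    The most trusted system with a non-empty value wins; if two systems share
    a trust level, the most recent update wins. Unknown systems rank last.
    """
    result = {}
    keys = sorted({k for r in records for k in r.attributes})
    for key in keys:
        candidates = [r for r in records if r.attributes.get(key) not in (None, "")]
        if candidates:
            best = max(candidates, key=lambda r: (-TRUST.get(r.system, 99), r.updated_at))
            result[key] = best.attributes[key]
    return result


records = [
    SourceRecord("CRM", datetime(2024, 5, 2), {"email": "a@new.example", "phone": "555-0101"}),
    SourceRecord("ERP", datetime(2024, 5, 1), {"email": "a@old.example", "phone": ""}),
]
golden = golden_record(records)
print(golden)  # {'email': 'a@old.example', 'phone': '555-0101'}
```

In this example the ERP email survives even though the CRM change is newer, because ERP is ranked as more trusted. The CRM phone number still fills the gap left by ERP's empty value.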

Solution Pattern Name: Workflow-Internal

| Solution Pattern Name: | Workflow-Internal |

| Problem: | A business process that includes interactions with one or more internal systems needs to be automated. |

| Solution: | Configure the workflow on the Business Process Management component and the decision logic on the Business Rules Management System. Configure Service Tasks on the Business Process Management component to invoke downstream systems. |

| Solution Guidelines: | Configure the workflow steps that perform the business process on the Business Process Management tool. Configure any decision logic on the Business Rules Management System. If the business process needs to interact with an application, configure a Service Task that either invokes the application via an API or sends a message to a Message Broker and waits for a response. Use Web Forms or Email for Human Tasks such as manual approvals. Implement error handling that meets the requirements, e.g., raise an alert to the support team if a task is not completed in an acceptable time, and escalate to the assignee's manager if a person has not responded. |

| Security Guidelines: | Enable transport layer security on all interfaces. Protect interfaces using credentials, API keys or certificates. |

| Context Diagram: | See diagram below |

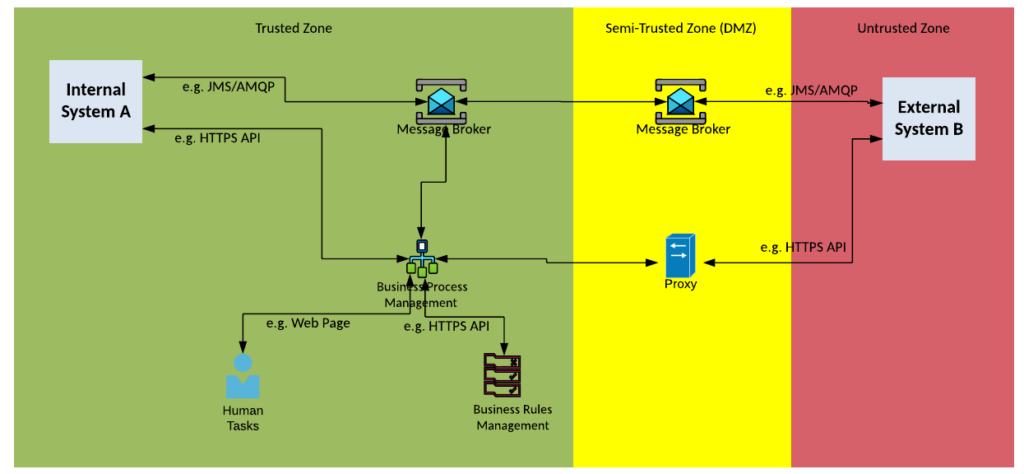
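The error-handling guideline above distinguishes two cases. A system task that overruns raises an alert to support, while an unanswered human task escalates to the assignee's manager. The Python sketch below shows that decision under stated assumptions: the `Task` fields, the timeouts and the returned action strings are hypothetical. A Business Process Management tool would normally express this with timer events rather than code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Task:
    task_id: str
    kind: str                      # "service" (system step) or "human" (e.g. approval)
    started_at: datetime
    timeout: timedelta             # acceptable completion time from the requirements
    manager: Optional[str] = None  # assignee's manager, for human tasks
    completed: bool = False


def timeout_action(task: Task, now: datetime) -> Optional[str]:
    """Return the action to take for an overdue task, or None if none is needed."""
    if task.completed or now - task.started_at < task.timeout:
        return None
    if task.kind == "human":
        # The person has not responded in time: escalate to their manager.
        return f"escalate {task.task_id} to {task.manager}"
    # A system step has not completed in time: alert the support team.
    return f"alert support team: {task.task_id} exceeded {task.timeout}"


start = datetime(2024, 1, 1, 9, 0)
approval = Task("approve-po-17", "human", start, timedelta(hours=24), manager="finance.manager")
erp_call = Task("call-erp", "service", start, timedelta(minutes=5))
print(timeout_action(approval, start + timedelta(hours=25)))   # escalate approve-po-17 to finance.manager
print(timeout_action(erp_call, start + timedelta(minutes=10)))  # alert support team: call-erp exceeded 0:05:00
```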

Solution Pattern Name: Workflow-External

| Solution Pattern Name: | Workflow-External |

| Problem: | A business process that includes interactions with one or more internal and external systems needs to be automated. |

| Solution: | Configure the workflow on the Business Process Management component and the decision logic on the Business Rules Management System. Configure Service Tasks on the Business Process Management component to invoke downstream systems. |

| Solution Guidelines: | Configure the workflow steps that perform the business process on the Business Process Management tool. Configure any decision logic on the Business Rules Management System. If the business process needs to interact with an application, configure a Service Task that either invokes the application via an API or sends a message to a Message Broker and waits for a response. Use Web Forms or Email for Human Tasks such as manual approvals. Implement error handling that meets the requirements, e.g., raise an alert to the support team if a task is not completed in an acceptable time, and escalate to the assignee's manager if a person has not responded. |

| Security Guidelines: | Enable transport layer security on all interfaces. Protect interfaces using credentials, API keys or certificates. Connections to the external system must go through an HTTP proxy or a Message Broker in the DMZ. |

| Context Diagram: | See diagram below |
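A Service Task that "sends a message to a Message Broker and waits for a response" is usually implemented as request-reply with a correlation ID. The Python sketch below simulates this pattern. In-memory `queue.Queue` objects stand in for the broker's request and reply destinations, and `fake_credit_check` is a hypothetical downstream application. A real deployment would use the broker's own client library.

```python
import queue
import threading
import time
import uuid

# In-memory queues stand in for the request and reply destinations on a
# Message Broker; a real deployment would use the broker's own client library.
request_q: "queue.Queue[dict]" = queue.Queue()
reply_q: "queue.Queue[dict]" = queue.Queue()


def service_task(payload: dict, timeout: float = 2.0) -> dict:
    """Send a request message and wait for the reply with the same correlation ID."""
    correlation_id = str(uuid.uuid4())
    request_q.put({"correlation_id": correlation_id, "body": payload})
    deadline = time.monotonic() + timeout
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"no reply for {correlation_id}")
        try:
            reply = reply_q.get(timeout=remaining)
        except queue.Empty:
            raise TimeoutError(f"no reply for {correlation_id}") from None
        if reply["correlation_id"] == correlation_id:
            return reply["body"]
        # A reply for another request: this sketch simply discards it.


def fake_credit_check() -> None:
    """Simulated downstream application that answers one request."""
    msg = request_q.get()
    approved = msg["body"]["amount"] <= 10_000
    reply_q.put({"correlation_id": msg["correlation_id"], "body": {"approved": approved}})


threading.Thread(target=fake_credit_check, daemon=True).start()
result = service_task({"customer": "C-42", "amount": 2_500})
print(result)  # {'approved': True}
```

The overall deadline matters here. A `TimeoutError` is the point where the workflow's error handling (alerting or escalation) would take over.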

Solution Pattern Name: Workflow-Cloud

| Solution Pattern Name: | Workflow-Cloud |

| Problem: | A business process that includes interactions with one or more external systems needs to be automated. |

| Solution: | Configure the workflow on the Business Process Management component and the decision logic on the Business Rules Management System. Configure Service Tasks on the Business Process Management component to invoke downstream systems. |

| Solution Guidelines: | Configure the workflow steps that perform the business process on the Business Process Management tool. Configure any decision logic on the Business Rules Management System. If the business process needs to interact with an application, configure a Service Task that either invokes the application via an API or sends a message to a Message Broker and waits for a response. Use Web Forms or Email for Human Tasks such as manual approvals. Implement error handling that meets the requirements, e.g., raise an alert to the support team if a task is not completed in an acceptable time, and escalate to the assignee's manager if a person has not responded. Consider deploying the solution on cloud-hosted components as part of a hybrid integration platform. |

| Security Guidelines: | Enable transport layer security on all interfaces. Protect interfaces using credentials, API keys or certificates. Connections to the external system must go through an HTTP proxy or a Message Broker in the DMZ. |

| Context Diagram: | See diagram below |

Solution Pattern Name: Native-Internal

| Solution Pattern Name: | Native-Internal |

| Problem: | An internal system needs to consume data from another internal system for which a native integration component exists. |

| Solution: | Configure the native integration component on the internal application to integrate with the other application |

| Solution Guidelines: | Configure the native integration plugin in the application. Document the interface and walk the integration support team through it, in case they receive calls related to the interface. |

| Security Guidelines: | Enable transport layer security on all interfaces. Where possible, secure the interface using OAuth, API keys, mutual TLS certificates or a username and password. Perform security testing on the interface to ensure it is secure. |

| Context Diagram: | See diagram below |
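Native plugins are usually configured rather than coded. However, where a plugin or a thin wrapper accepts a TLS context or request headers, the security guidelines above map to settings like those in the Python sketch below. It uses only the standard `ssl` and `os` modules. The environment variable name `PARTNER_API_KEY` and the `X-API-Key` header are hypothetical examples, since header names vary by provider.

```python
import os
import ssl
from typing import Optional


def client_tls_context(client_cert: Optional[str] = None,
                       client_key: Optional[str] = None) -> ssl.SSLContext:
    """TLS context that verifies the server and, given a client certificate, enables mutual TLS."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)  # hostname + certificate checks on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2               # refuse legacy protocol versions
    if client_cert:
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


def api_key_header(env_var: str = "PARTNER_API_KEY") -> dict:
    """Read the API key from the environment instead of hard-coding it in cleartext."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return {"X-API-Key": key}  # header name varies by provider; this one is an assumption
```

In practice the key would more likely come from a secrets manager. The point is that credentials stay out of source code and configuration files.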

Solution Pattern Name: Native-External

| Solution Pattern Name: | Native-External |

| Problem: | An internal system and an external system need to exchange data, and a native integration component exists for this exchange. |

| Solution: | Configure the native integration component on the source application to integrate with the target application |

| Solution Guidelines: | Configure the native integration plugin in the application. Document the interface and walk the integration support team through it, in case they receive calls related to the interface. |

| Security Guidelines: | Enable transport layer security on all interfaces. Where possible, secure the interface using OAuth, API keys, mutual TLS certificates or a username and password. Connections to the external system must go through an HTTP proxy in the DMZ. Perform security testing on the interface to ensure it is secure. |

| Context Diagram: | See diagram below |

Solution Pattern Name: Native-Cloud

| Solution Pattern Name: | Native-Cloud |

| Problem: | Two external systems need to exchange data, and a native integration component exists for this exchange. |

| Solution: | Configure the native integration component on the source application to integrate with the target application |

| Solution Guidelines: | Configure the native integration plugin in the application. Document the interface and walk the integration support team through it, in case they receive calls related to the interface. |

| Security Guidelines: | Enable transport layer security on all interfaces. Where possible, secure the interface using OAuth, API keys, mutual TLS certificates or a username and password. Perform security testing on the interface to ensure it is secure. |

| Context Diagram: | See diagram below. |

Solution Pattern Name: AIML-Internal

| Solution Pattern Name: | AIML-Internal |


| Problem: | A Machine Learning model needs to be trained on data from an internal data source before it is deployed for use. |

| Solution: | Capture the data from the internal data source. Prepare the data for use by the model by validating it and extracting the required information. Train and test the model using the prepared data. Deploy the model and run inference on data passing through the integration layer to obtain predictions. |

| Solution Guidelines: | 1. Capture the data from the internal data source. API calls can be accepted by API Management and processed by Middleware Services. Messages can be accepted by the Message Broker and processed by Middleware Services. Bulk extracts from a database can be captured by ETL. Files can be captured by the Secure File Transfer component and processed by Middleware Services or ETL. High-volume message streams can be accepted by the Event Stream Processing component. 2. Prepare the data for use by the model by validating it and extracting the required information. After capture, the data can be processed by Middleware Services or ETL; a script or program in any language can also be used here. 3. Train and test the model using the prepared data. How the data is made available depends on the source format the model prefers: files, messages in a Message Broker, or the Event Stream Processing component. 4. Deploy the model and run inference on data passing through the integration layer to obtain predictions. This depends on whether the model runs in real time or over batches of data. Real-time models can be invoked as part of a Middleware Service triggered by an API call or message. Batch models can be triggered by a schedule or event and run directly over data in files or in a database. |

| Security Guidelines: | Enable transport layer security on all interfaces. Use credentials, tokens or certificates to authenticate consumers and providers. Do not store credentials to datastores in cleartext. |

| Context Diagram: | See the three context diagrams below: 1. Capture the data from the internal data source and prepare it for use by the model by validating it and extracting the required information. 2. Train and test the model using the prepared data. 3. Deploy the model and run inference on data passing through the integration layer to obtain predictions. |

1. Capture the data from the internal data source and prepare it for use by the model by validating it and extracting the required information.

2. Train and test the model using the prepared data.

3. Deploy the model and run inference on data passing through the integration layer to obtain predictions.
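The "prepare" and "train and test" steps can be sketched in Python as follows. Captured records are validated, rejected records are dropped, features and labels are extracted, and part of the data is held back for testing. The record schema (`customer_id`, `amount`, `label`), the 80/20 split and the seed are hypothetical. Training itself is left out because, as noted above, it depends on the model.

```python
import random
from typing import Iterable, List, Tuple

REQUIRED = ("customer_id", "amount", "label")  # hypothetical schema
Row = Tuple[List[float], int]


def prepare(raw: Iterable[dict]) -> List[Row]:
    """Validate raw records and extract (features, label) pairs for training."""
    rows = []
    for rec in raw:
        if any(rec.get(field) in (None, "") for field in REQUIRED):
            continue  # reject: a required field is missing or empty
        try:
            features = [float(rec["amount"])]
            label = int(rec["label"])
        except (TypeError, ValueError):
            continue  # reject: value cannot be converted
        rows.append((features, label))
    return rows


def train_test_split(rows: List[Row], test_fraction: float = 0.2, seed: int = 7):
    """Shuffle reproducibly and hold back part of the data for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


raw = [
    {"customer_id": "C1", "amount": "120.5", "label": 0},
    {"customer_id": "C2", "amount": "n/a", "label": 1},  # rejected: bad amount
    {"customer_id": "", "amount": "80", "label": 0},     # rejected: missing ID
] + [{"customer_id": f"C{i}", "amount": str(i * 10), "label": i % 2} for i in range(3, 13)]

rows = prepare(raw)
train, test = train_test_split(rows)
print(len(rows), len(train), len(test))  # 11 8 3
```

The same validation logic could run in a Middleware Service or an ETL job. In a real pipeline, rejected records would typically be logged rather than silently skipped.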

Solution Pattern Name: AIML-External

| Solution Pattern Name: | AIML-External |

| Problem: | A Machine Learning model needs to be trained on data from an external or cloud-based data source before it is deployed for use. |

| Solution: | Capture the data from the external or cloud-based data source. Prepare the data for use by the model by validating it and extracting the required information. Train and test the model using the prepared data. Deploy the model and run inference on data passing through the integration layer to obtain predictions. |

| Solution Guidelines: | 1. Capture the data from the external data source. API calls can be accepted by API Management and processed by Middleware Services. Messages can be accepted by the Message Broker and processed by Middleware Services. Bulk extracts from a database can be captured by ETL. Files can be captured by the Secure File Transfer component and processed by Middleware Services or ETL. High-volume message streams can be accepted by the Event Stream Processing component. 2. Prepare the data for use by the model by validating it and extracting the required information. After capture, the data can be processed by Middleware Services or ETL; a script or program in any language can also be used here. 3. Train and test the model using the prepared data. How the data is made available depends on the source format the model prefers: files, messages in a Message Broker, or the Event Stream Processing component. 4. Deploy the model and run inference on data passing through the integration layer to obtain predictions. This depends on whether the model runs in real time or over batches of data. Real-time models can be invoked as part of a Middleware Service triggered by an API call or message. Batch models can be triggered by a schedule or event and run directly over data in files or in a database. |

| Security Guidelines: | Enable transport layer security on all interfaces. Use credentials, tokens or certificates to authenticate consumers and providers. Do not store credentials to datastores in cleartext. Avoid direct database connections with external parties; use API calls instead. Connections to the external system must go through an HTTP proxy, Message Broker or Secure File Transfer Gateway in the DMZ. |

| Context Diagram: | See the three context diagrams below: 1. Capture the data from the external data source and prepare it for use by the model by validating it and extracting the required information. 2. Train and test the model using the prepared data. 3. Deploy the model and run inference on data passing through the integration layer to obtain predictions. |

1. Capture the data from the external data source and prepare it for use by the model by validating it and extracting the required information.

2. Train and test the model using the prepared data.

3. Deploy the model and run inference on data passing through the integration layer to obtain predictions.
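The deployment step distinguishes real-time inference, where a Middleware Service scores each API call or message, from batch inference, which is triggered by a schedule or event over a file or extract. The Python sketch below shows both paths sharing one scoring function. `toy_model` is a hypothetical stand-in for a trained model, and the CSV extract is invented purely for illustration.

```python
import csv
import io
from typing import Callable, Iterable, List

# Any trained model can be wrapped as a callable from features to a prediction.
Model = Callable[[List[float]], float]


def toy_model(features: List[float]) -> float:
    """Hypothetical stand-in for a deployed model: flags large amounts."""
    return 1.0 if features[0] > 1000 else 0.0


def handle_request(model: Model, message: dict) -> dict:
    """Real-time inference inside a Middleware Service, one API call or message at a time."""
    prediction = model([float(message["amount"])])
    return {**message, "prediction": prediction}


def score_batch(model: Model, rows: Iterable[dict]) -> List[dict]:
    """Batch inference triggered by a schedule or event over a file or table extract."""
    return [handle_request(model, row) for row in rows]


realtime = handle_request(toy_model, {"id": "T1", "amount": 2500})
print(realtime)  # {'id': 'T1', 'amount': 2500, 'prediction': 1.0}

extract = io.StringIO("id,amount\nT2,50\nT3,1500\n")
batch = score_batch(toy_model, csv.DictReader(extract))
print([r["prediction"] for r in batch])  # [0.0, 1.0]
```

Reusing the same scoring path for both modes helps keep real-time and batch predictions consistent. A very large batch would more likely stream rows than build a list in memory.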